Late 70s and the 80s: Forget BASIC, we had Pascal and C

Update 2024-04-01: I’ve just learned, the day after publishing this, that Niklaus Wirth, the creator of Pascal, died on January 1st 🙁

+ added a link to a post about Turbo Pascal UI

+ updated the section about reasons to pass return values via registers

+ clarification that the BASIC variant we talked about previously was a certain implementation from the context of E.Dijkstra’s quote

+ added mention of UCSD Pascal, and that C was emerging on 16-bit machines, not 8-bit ones (plus a link in the last section)

+ added a comment on efficiency of C or Pascal on 8-bits

Update 2024-03-06: Improved wording

Since the post about the state of BASIC as compared to popular languages at the time when Dijkstra made his hateful quote became the most popular post of this site (note to self: hate sells well, at least for 50 years), it’s time to pick up some loose threads that have been deliberately left as such. What were the other options? How good were they? Do we still have them? (What do they know? Let’s find out)

Disclaimer: I am describing the beginnings of these languages and their first versions — these things have been released and popular a few years before I was born. Please suggest edits if you see something incorrect!

I’ve covered the big ones (back then) that never died: PL/I, COBOL, Fortran and, of course, BASIC. But following up on the promise:

“These languages were not all that was on the market in the 1970s, but they were indeed the most popular ones! We will dig into the emerging alternatives separately.”… This is the time, and this time we’re playing open cards with no clickbait title. Almost.

The one we already met – BASIC

To recap the BASIC pros and cons post:

- BASIC, looking purely at the language, and not so much at the exciting environment it came in, had some disadvantages:

  - No code structure (except being able to jump to a line of code with GOSUB and RETURN from there): no functions, no procedures, and all variables were global.

  - In the 70s, it didn’t even have IF ... THEN ... END IF; you had to GOTO a line if the condition was met, like in assembly language.

  - When it was famously criticized, it didn’t support any user input, and had no graphics or sound support, because there was no monitor and no speaker.

  - Once it gained this support, it wasn’t standardized: not all computers had equal access to their own capabilities from BASIC (sorry, C64, no PLOTting a point or drawing a LINE for you), and many other keywords and their behavior differed as well.

  - When Dijkstra made the infamous comment, it was Dartmouth BASIC, and it was a compiled language (unlike the BASIC we know from home computers such as the C64). But so was PL/I, with all its support for procedures, local variables, and structures.

- But it also had its advantages:

  - It was simple enough for all beginners, to help create all purpose programs (BASIC stands for Beginners All Purpose Symbolic Instruction Code). Just a few control keywords to learn, and you’re ready, you’ve mastered the language itself.

  - Once home computers appeared in the 80s, it had a lot of access to the capabilities of the computer: disk, tape, graphics, sound, direct memory access, and direct hardware access (ok, I didn’t go into these features that deep, yet)!

  - Thanks to its simplicity, it taught the users how the computer worked internally. Other languages hide the internals of how CPU and memory interact under many more levels of abstraction, but if you don’t have this abstraction, you discover and learn it yourself. GOTO and GOSUB statements are in fact the same kind of jumps as the computer processor does internally. If your variables don’t have any structure in memory, you have to figure out how to manage them together.

The one that was entering the academia – Pascal

The title mentions Pascal because the language was created by Niklaus Wirth “around 1970”, so it could have been something to compare to. However, to be historically correct, it wasn’t exactly built from scratch either. Pascal was loosely based on Algol 60, which had existed since the 60s. Algol 60 may look a little familiar, even if the name isn’t (sample src: wikipedia):

    procedure Absmax(a) Size:(n, m) Result:(y) Subscripts:(i, k);
        value n, m; array a; integer n, m, i, k; real y;
    comment The absolute greatest element of the matrix a, of size n by m,
        is copied to y, and the subscripts of this element to i and k;
    begin
        integer p, q;
        y := 0; i := k := 1;
        for p := 1 step 1 until n do
            for q := 1 step 1 until m do
                if abs(a[p, q]) > y then
                    begin y := abs(a[p, q]);
                        i := p; k := q
                    end
    end Absmax

In the 70s, Pascal was getting popular in the “minicomputer” market. If you remember that a computer was a huge remote mainframe, you can guess that a mini computer is one that is smaller than a room, maybe at most 2 meters tall and a meter wide. And has reel-to-reel tape recorders built in.

Beginning in the 80s, and certainly throughout the 2000s as well, Pascal could be found at universities. “So was BASIC”, you might remember from the previous article. But Pascal could serve a deeper purpose than BASIC: instead of just learning how to code, you could now learn how to design algorithms and data structures. This is the name of a course in many Computer Science programs, but it’s also a book by Niklaus Wirth (Pascal’s creator) from 1985.

Let’s mention Edsger Dijkstra for the last time. In 1960 he published a paper called “Recursive programming”, defining what we have been referring to as “a stack” (as in “call stack”, but also “stack vs heap”, the two main ways of storing variables in computer programs to this day) for the next 64 years. As noted in the introduction of Algorithms and Data Structures, Dijkstra was also the author of the publication titled “Notes on structured programming” (1970), which shaped structured programming for decades. In this paper, Dijkstra presents foundational concepts and principles for writing clear, reliable, and efficient computer programs using structured programming techniques. The ideas put forth in this paper have had a lasting impact on the development of programming methodologies and have contributed to the establishment of best practices in software design and development.

Structured programming is a programming paradigm aimed at improving the clarity, quality, and development time of a computer program by making extensive use of subroutines, block structures, for and while loops, and other control structures. It promotes the idea of breaking down a program into small, manageable sections, making it easier to understand and maintain.

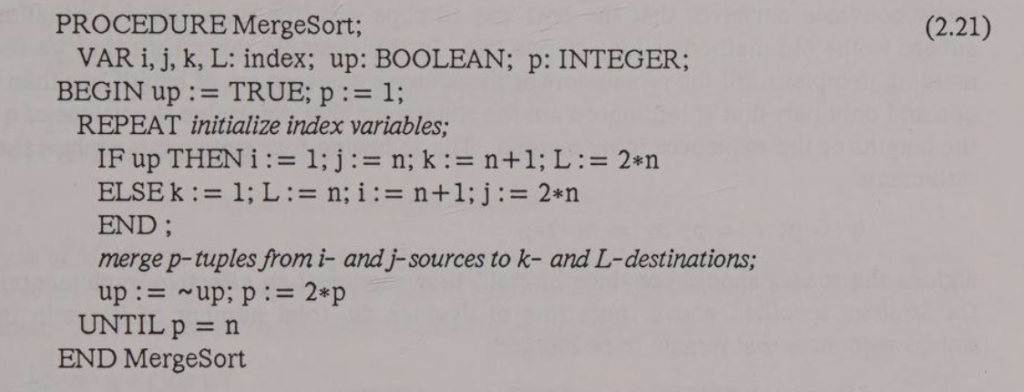

This gives us background on how Pascal was born, taught, and how it helped spreading the good practice of introducing proper level of abstractions in the code, as well as in the data. In Algorithms and data structures, we may find descriptions of most common problems to solve in any computer program, illustrated with samples of code in Pascal:

The above example from the book describes MergeSort sorting algorithm, in academically concise, probably not the most reader-friendly format. Trust me, using fixed-width font for computer code was not a thing yet, especially in serious publications, and syntax highlighting is a whimsical idea of the PC era.

Why did it make a difference?

The widespread popularity of Pascal had some advantages that are definitely worth noting here. It was designed to make code more comprehensible – both for the human, and the computer.

The human could benefit from encapsulating more complex logic into procedures or functions (in this distinction a function is a procedure that returns a value) that you can call from other places in the code, like we do in all modern programming languages – like padWithZeroes('123'). The code could be organized and formatted in a more human comprehensible way, with the core idea being nested blocks, that were nested both logically and visually (oh the memory-costly whitespace!).

The language encouraged defining your own types and subtypes for everything – which is a very good practice. For example (with uppercase still being the most common spelling for things quite often, but Pascal was not case sensitive):

    TYPE
      COLOR = (RED, YELLOW, BLUE, GREEN, ORANGE);
      SCORE = (LOST, TIED, WON);
      SKILL = (BEGINNER, NOVICE, ADVANCED, EXPERT, WIZARD);
      PRIMARY = RED .. BLUE;
      NUMERAL = '0' .. '9';
      INDEX = 1 .. 100;

In this example, when we create a subtype, such as for a NUMERAL, it clarifies for both the code reader and the compiler that only specific values are permissible. Similarly, the COLOR type would be an enumeration, as commonly defined with the enum keyword in many modern languages, specifying the range of potential literal values. This approach not only informs programmers about what to anticipate when reading the code, but also enables the compiler to prohibit misuse and the assignment of inappropriate values, or operations that are nonsensical for the given type. As a bonus, the compiler knowing the range of values could decide to allocate less memory for them.
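For comparison, here is a minimal sketch of how the same idea could look in C. C has enum, but no subrange types, so the range check has to be written by hand and happens at run time. The names color_t, is_primary and index_from_int are made up for this illustration.

```c
#include <stdbool.h>

/* Enumeration, mirroring the Pascal COLOR type. */
typedef enum { RED, YELLOW, BLUE, GREEN, ORANGE } color_t;

/* Pascal's PRIMARY = RED .. BLUE has no direct C equivalent;
   this hypothetical helper checks the range at run time instead. */
bool is_primary(color_t c) {
    return c >= RED && c <= BLUE;
}

/* Emulates INDEX = 1 .. 100: returns true and stores the value
   only when it lies inside the allowed range. */
bool index_from_int(int value, int *out) {
    if (value < 1 || value > 100) {
        return false;
    }
    *out = value;
    return true;
}
```

The Pascal compiler can reject an out-of-range constant before the program ever runs; in this sketch the programmer has to remember to call the helper.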

Being a compiled language also meant that software written in Pascal would execute faster than software written in an interpreted language: by the time of execution, it’s already all machine code ready to run, without the extra step. For commercial software it also meant that it was hard to examine how a program works internally, and hard to modify it, which are properties that commercial software authors would usually desire.

Before we proceed to the last benefit: let’s state a difference between two similar ideas in code: function declaration vs definition.

In many modern languages, declarations are omitted, which makes it harder to see the difference. In essence, declaring a function or a variable we only tell the compiler that it exists — somewhere, and what it looks like, but we don’t spend a single byte on it yet.

Function definition, on the other hand, would actually contain the code required to implement it or, for variables, the data that is supposed to be associated with the variable.

For example, a declaration of a hello world procedure in Pascal would look like this (the forward directive tells the compiler that the body comes later):

    procedure SayHello; forward;

and a variable declaration could be: var a: Integer;, usually grouped with other variables in the var block that declares all variables that are going to be used in the code block that follows.

while a definition of this procedure would look like:

    procedure SayHello;
    begin
      WriteLn('Hello, world!');
    end;

and defining the variable would happen when we actually assign a value to it: a := 10;

Pascal was designed to enable fast compilation, even on small machines: the compiler makes a single pass, reading the entire source file just once. This introduced the requirement for all variables to be declared before use within each block, and for every procedure to be declared before it is called, which may be seen as inconvenient and outdated by modern standards. However, it simplified and sped up compilation, reduced memory usage, and encouraged programmers to organize their code more systematically.

Declaring variables close to where they are used (as preferred today) was sacrificed to achieve these optimization goals, even though a single declaration block at the top is not ideal for large functions.
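The declaration versus definition split survives in C to this day, so here is a small sketch of it. The function names format_greeting and greeting_length are invented for the example; the point is only that the prototype lets the compiler accept a call before it has seen the body.

```c
#include <stdio.h>
#include <string.h>

/* Declaration (prototype): tells the compiler the function exists
   and what it looks like, without emitting any code for it. */
int format_greeting(char *buf, size_t size, const char *name);

/* Because of the declaration above, the compiler can handle this
   call before it has seen the function body. */
size_t greeting_length(const char *name) {
    char buf[64];
    format_greeting(buf, sizeof buf, name);
    return strlen(buf);
}

/* Definition: the actual code. */
int format_greeting(char *buf, size_t size, const char *name) {
    return snprintf(buf, size, "Hello, %s!", name);
}
```

Remove the prototype and a modern compiler will refuse the call inside greeting_length, for much the same reason a single-pass Pascal compiler would.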

The biggest advantage of Pascal being both taught and popular in all kinds of programming was that there were, and still are, a lot of resources to learn from, along with plenty of examples and reference code.

I like being practical, where could I *use* Pascal?

I’m glad you asked! Let’s assume we’re in the 80s for now. The answer is: everywhere. Even though the UCSD Pascal variant, created at the University of California, San Diego, faded after some time of being the Pascal implementation (created in 1977, latest release in 1984), most computers had a version of Pascal of some sort. The Amstrad CPC464 and ZX Spectrum had HiSoft Pascal, Atari had Kyan Pascal, Commodore had Pascal-64, and Macintosh users remember Lightspeed Pascal.

Not to discredit UCSD Pascal, of course, as it could run on multiple computers (including big ones, like PDP-11 :)), and microcomputers with the most popular CPUs: Intel 8080, Zilog Z80, MOS 6502, Motorola 68000… And being able to share code for the same dialect mattered. Which brings us to a certain Pascal for CP/M…

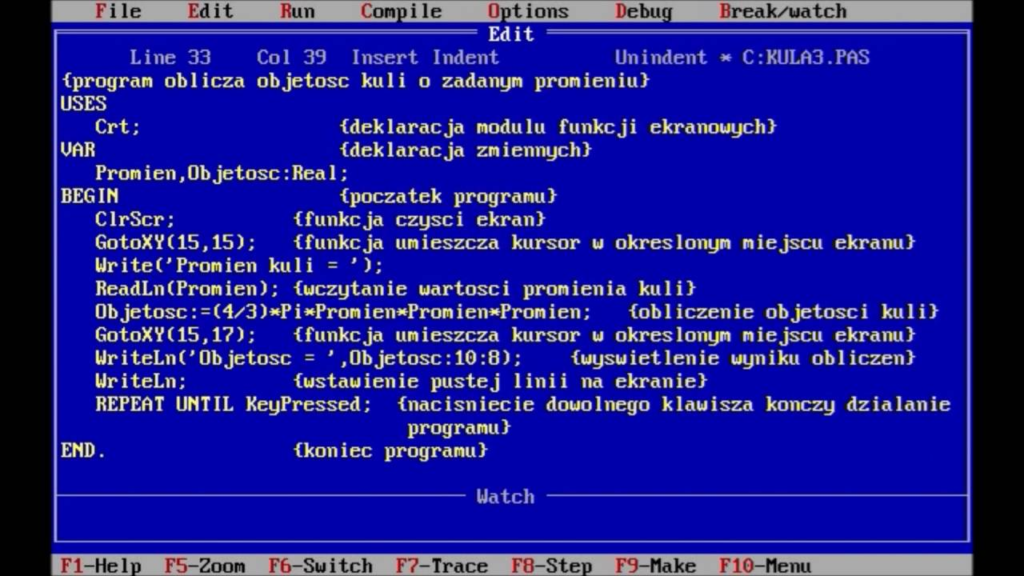

Thanks to the popularity of the CP/M system, which could be shared by almost anything built with the Z80 CPU, any computer that could run it (Amstrad CPC, Spectrum +3, C128, Apple II with a Z80 card, MSX, IBM, Sam Coupe, TRS-80, and many others) could also run the most popular version of this language: Turbo Pascal by Borland (best known from the later editions for IBM PC compatibles).

Above, we see a screen capture of Turbo Pascal 3.0 running under the CP/M operating system on an Amstrad CPC6128, a machine with a 4 MHz (effectively “around 3.3 MHz”) Z80 CPU and 128 KiB of RAM. 128 KiB of RAM is enough here to hold the operating system, the compiler and editor, the source code, and the program being compiled, as well as the contents of the entire screen. You may notice that each byte of code produced is counted meticulously, and we still had over 28 KiB of free memory.

This particular Pascal compiler got so popular that many people have been confusing its name with the name of the language for the last 40 years. The tool and the language have been evolving together, along with the hardware it could run on. While there isn’t a newer version for CP/M than 3.0, some later versions brought significant improvements:

- v4.0 introduced units, making it much easier to create even more modular code, where each unit could be compiled separately. It also introduced the full-screen editor it was loved for; it was one of the first IDE (Integrated Development Environments) to ever exist, where one full-screen interface allowed editing the code, compiling and debugging it.

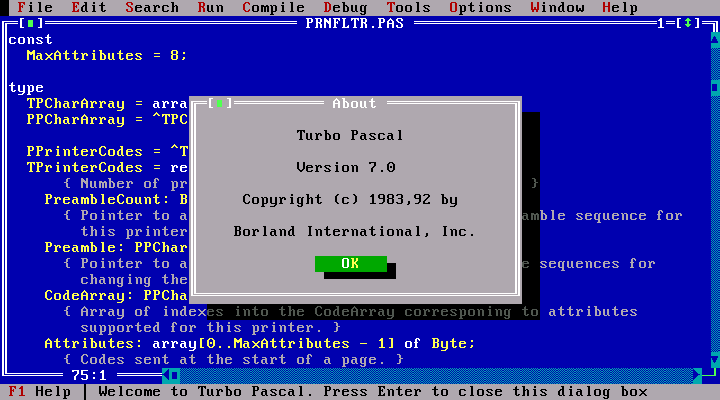

In v5.0 it became colorful with a familiar mix of blue, white and yellow:

(image courtesy of Adam Cichowicz, from his YT video about TP 5.0) - v5.5 introduced object oriented programming – from now on, one could use classes, the main way to encapsulate our business logic since 1989, that only JavaScript and Golang refuse to accept ;-).

A class is more or less what the name tries to convey – a product of classification of the ideas describing the world around us into a hierarchy of categories. A class of objects shares some properties – they may have the same set of attributes, or functionalities. Most standard example would be: we can distinguish the a class ofAnimalamong all objects, which may have sub-classes, such asDog,Cator, which comes as a shock to some (warning: TikTok link),Human. We can say that every animal “implements” (realizes the functionality of)EatandSleep, but onlyDogcanBark.

You can say thatDogandCatare subclasses ofAnimaland they inherit from it (meaning a property of the parent class is also a property of the child class).

I’m only half-joking here about JS and Golang. Most major languages support classes. JavaScript does object oriented programming a little differently – so-called inheritance is based on object instances directly, rather than the class. GoLang pretends not to have classes, but it has data structures that can have methods, excuse me, functions attached to them… which is effectively the same thing. - Turbo Pascal 7.0 introduced syntax highlighting in 1992. It was also the last text-based version of the interface, succeeded by Turbo Pascal for Windows, and later by Delphi.

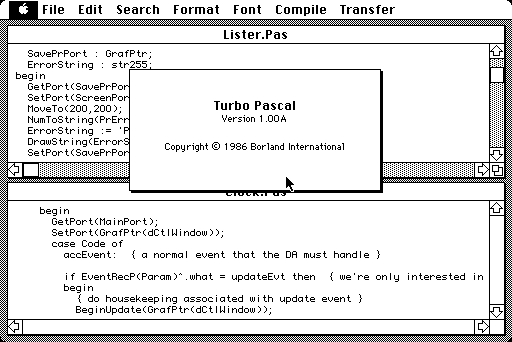

- Macintosh users enjoyed their Turbo Pascal for Macintosh 6 years earlier, in 1986! Winworld notes about it: “Turbo Pascal for Macintosh was a short lived port of Borland’s Pascal product to the Apple Macintosh. It featured a more advanced compiler than the DOS version at the time. It was an awkward time as Borland had previously been very critical of the under powered and closed Macintosh 128k architecture. While at the same time Apple had not been very supportive of third party development tools.”

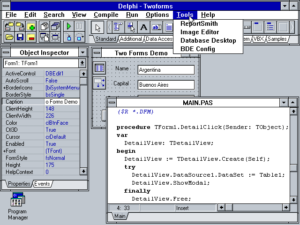

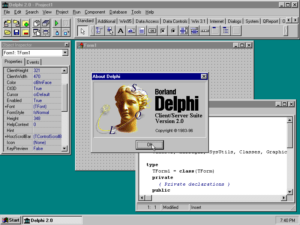

While it’s technically not Turbo Pascal anymore, and wasn’t released until 1995, it’s still Pascal – so I definitely should mention Delphi. It was a huge evolution from just writing Pascal for DOS or Windows (which required knowing how Windows actually works, how the windows and buttons are rendered, and how to communicate with the operating system properly), allowing very easy application development – requiring just to drop a button onto a window, and double-click it to write the code to execute when it’s clicked. To be honest, it’s often not even that easy today.

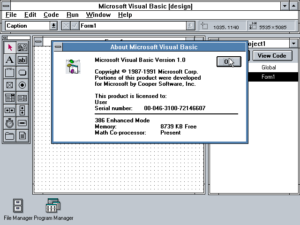

Delphi’s multi-window interface was extremely popular and became a standard on its own, even though it was not the first application to have it – it was heavily inspired by… Visual Basic from 1991! Nevertheless, for many programmers, the lightweight “last really fast version” Delphi 7 remained in use even 20 years after it got released (src).

I see C stands for Class

Except C, the language, does not support classes (reminder: C and C++ are not the same language). The name actually suggests an evolution from the language B created at Bell Labs in 1969.

But the language did exist in the 70s (kinda) and 80s! It was in development (first book documenting it to be published in 1978, and referring to Fortran and Pascal a lot), and was not yet a valid alternative to BASIC when the famous computer scientist was scolding BASIC, COBOL, Fortran and APL. Maybe that’s why he didn’t criticize it.

The original design of C and Pascal had different goals in mind. C was initially developed to implement the Unix operating system, with a focus on providing low-level access to memory and system resources, making it well-suited for system programming and developing operating systems. On the other hand, Pascal was designed as a language for teaching programming and software engineering principles, with a strong emphasis on readability, structured programming, and data structuring.

This difference is reflected in what both languages “feel like” and what they put emphasis on. Typically, C is “closer to the metal” – for example, the char type that represents a single character is just a small integer type. C is perfectly happy with expressions such as int x = 'b'-1 or char c = 64. This is in line with what the CPU would do: a character was typically a single-byte number, often held in a single-byte register when operated upon, and it doesn’t matter to the computer what it represents. Pascal aims at abstracting different meanings into different types, so even though the processor wouldn’t need to know if it’s an 'a' or the number 97, the programmer should explicitly and unambiguously use ord('a') to convert the letter to its ASCII code, or chr(97) to convert it back.

The most practical usage of this C trait would be converting a digit to its value with val = digit - '0'; – subtracting the ASCII code of the character 0 from the character of the input.
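As a quick sketch of that trick in action, the function below turns a whole run of digit characters into a number. The name parse_digits is made up for this example, and it deliberately ignores signs and overflow.

```c
/* Converts a string of decimal digits to its numeric value.
   Stops at the first character that is not a digit.
   (parse_digits is a made-up name for this sketch.) */
int parse_digits(const char *s) {
    int value = 0;
    while (*s >= '0' && *s <= '9') {
        value = value * 10 + (*s - '0');  /* digit character -> 0..9 */
        s++;
    }
    return value;
}
```

With this, parse_digits("1984") gives 1984, without a single explicit conversion between characters and numbers.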

Similarly, we know that computers operate on 0s and 1s, so-called binary values (binary because there’s just two), representing true and false of some information. Naturally, Pascal therefore defines true and false as the two enum values for a boolean type, while C… decides there’s no bool type, and all that exists is 1 and 0. This is true even for values of operations such as comparison a == b – technically it either returns 1 or 0 in C, but not true or false. This too feels close to metal.
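Because a comparison is just an int, you can do arithmetic on it. The sketch below (count_equal is a hypothetical helper) counts matching elements by simply adding up the results of ==. I use a C99-style loop variable here for brevity.

```c
/* Counts how many elements equal `wanted` by adding up the
   results of ==, which are plain ints: 1 for true, 0 for false. */
int count_equal(const int *items, int n, int wanted) {
    int count = 0;
    for (int i = 0; i < n; i++) {
        count += (items[i] == wanted);
    }
    return count;
}
```

The Pascal equivalent would need an explicit if or an ord() call, because a Boolean is not a number there.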

At the time, it was a common convention in assembly to pass the return value in the A (for 8-bit, later AX for 16-bit, and EAX for 32-bit) register of the processor.

(Why? One of the reasons is that it was faster than returning a value through memory on most architectures, even at the time. In later years, the use of registers for return values was also sustained to avoid concurrent memory access. Passing return values in registers rather than in memory allowed for safer and more predictable execution of functions, particularly in scenarios where a function might be interrupted and called again before completing its previous execution, since all register values are restored when resuming from an interrupt.)

The first version of C didn’t even require specifying a return type for a function, but just assumed it’s int! This would be considered harmful by Pascal creators, and not specifying the return type is today considered a bad practice in C as well.

Let’s have a look at some very basic examples (no data structures):

    /* 1978 C example for checking palindrome */
    #include <stdio.h>
    #include <string.h>

    /* No return type given: early C assumes int */
    isPalindrome(char *str) {
        int len;
        int i, j;

        len = strlen(str);
        for (i = 0, j = len - 1; i < j; i++, j--) {
            if (str[i] != str[j]) {
                return 0; /* Not a palindrome */
            }
        }
        return 1; /* Palindrome */
    }

    main() {
        /* An array cannot be assigned to after its declaration,
           so it is initialized right here */
        char testStr[] = "radar";

        if (isPalindrome(testStr)) {
            printf("%s is a palindrome\n", testStr);
        } else {
            printf("%s is not a palindrome\n", testStr);
        }
        return 0;
    }

while in Pascal we’d have:

    (* Pascal example for checking palindrome *)
    program PalindromeCheck;

    function IsPalindrome(str: string): Boolean;
    var
      i, j: Integer;
    begin
      j := Length(str);
      for i := 1 to j div 2 do
      begin
        if str[i] <> str[j - i + 1] then
        begin
          IsPalindrome := False; { Not a palindrome }
          Exit;
        end;
      end;
      IsPalindrome := True; { Palindrome }
    end;

    var
      testStr: string;

    begin
      testStr := 'radar';
      if IsPalindrome(testStr) then
        writeln(testStr, ' is a palindrome')
      else
        writeln(testStr, ' is not a palindrome');
    end.

You can notice that C doesn’t bother using too many data types – for example, while Pascal has a string type, C uses a pointer to a character. The assumption is that subsequent characters follow right after the address this pointer holds, and the string ends where a 00 byte is found (a so-called null-terminated string). The example also shows that char * is basically interchangeable with an array of characters: the test string is declared as an array, yet passed where a pointer is expected. We also return an integer instead of a boolean.
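To make the null-terminated string idea concrete, here is a hand-written sketch of what strlen has to do. The name my_strlen is made up; the real library function is usually far more optimized, but the principle is the same.

```c
#include <stddef.h>

/* Hand-written equivalent of strlen: walks the memory after the
   pointer until it reaches the terminating 0 byte.
   An array argument decays to a pointer to its first element,
   so the function accepts both char arrays and char pointers. */
size_t my_strlen(const char *s) {
    size_t n = 0;
    while (s[n] != '\0') {
        n++;
    }
    return n;
}
```

Note that the length is not stored anywhere: an array holding "radar" takes 6 bytes, five letters plus the 0 byte, and every length query walks them again.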

In Pascal, functions have this interesting convention that didn’t catch on in many languages, that you can assign the result value to the function name. So once we do IsPalindrome := False;, it will be the result of the IsPalindrome call, unless updated later before exiting the function.

While both languages at the time separated variable declarations and usage, C had this nice feature of being able to initialize the variable with a value in its declaration (int l = strlen(s);). The original Pascal language did not support that (but, for example, today’s Free Pascal does). C’s stdio package also provided the extremely useful printf function, where f stands for “format”: you can declare the entire message first, and the arguments to be inserted into it later. It makes a lot of string formatting much easier to read.
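A small sketch of the format idea follows. It uses snprintf, a later sibling of printf that writes into a buffer instead of the screen (it was standardized in C99, so it is not 1978 C), and format_score is a name I made up.

```c
#include <stdio.h>

/* Builds a report line: the whole message is declared first,
   and the values to insert follow as separate arguments.
   %s takes a string, %05d a decimal int padded with zeroes
   to five digits. */
int format_score(char *buf, size_t size, const char *player, int score) {
    return snprintf(buf, size, "%s scored %05d points", player, score);
}
```

Calling format_score with "Ada" and 42 fills the buffer with "Ada scored 00042 points".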

It’s also important to note that C started mostly on 16-bit machines, which existed in the 80s, but weren’t the ones you would find at home. So the regular home computer user, even if they wanted to get into compiled languages, would probably not see it as a popular option.

To this day, targeting 8-bit machines in C is considered suboptimal, and many optimizations are not there either.

C is for Change

Today, if you say “it’s written in C”, it’s not the same language that Ritchie and Kernighan would use. To be frank, what their standard suggested is often not allowed by the compilers used today.

Like many languages, C has been standardized, and it has evolved along the way. The first major change came with the ANSI C standard (C89), which was completed in 1989 and ratified as ANSI X3.159-1989. This version of the language is often referred to as “ANSI C”, or C89, and brought about substantial changes to the language, such as the introduction of new features like volatile, enum, signed, void, and… const.

Subsequent standards, including C99 and C11, introduced additional features and modifications to the language, further expanding its capabilities and refining its syntax and semantics.

Some changes were adopted from C++, the language that intended to improve upon C. With C99, programmers were finally free to declare variables anywhere in the code (mixing declarations with executed statements), as the compiler had much more memory to use now. C99 also finally brought the bool type to the language (through the stdbool.h header)! Another ported feature that you’d expect to already be there was the ability to use rest-of-line comments, like so: // comment.
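Here is a sketch of the earlier palindrome check rewritten with these C99 features. It is my own rewrite for illustration, not code from any standard or book.

```c
#include <stdbool.h>   /* C99: bool, true, false */
#include <string.h>

// C99: rest-of-line comments are allowed
bool is_palindrome(const char *str) {
    size_t len = strlen(str);      // initialized in its declaration
    if (len < 2) {
        return true;               // empty and one-letter strings
    }
    // C99: declarations may follow statements, and loop
    // variables can be declared inside the for header
    for (size_t i = 0, j = len - 1; i < j; i++, j--) {
        if (str[i] != str[j]) {
            return false;
        }
    }
    return true;
}
```

The return type is now spelled out, and it says what the function means rather than how the CPU stores it.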

Pascal vs C recap

Most other C features aim more at making it easy to write – a lot of shortcuts, abbreviations, shorter and less verbose operators (like i++ instead of i = i+1), mixing types that are represented identically internally by the CPU.

While C is super concise, the same sequence of characters can mean different things depending on the context. For example, a * b can mean either “multiply a times b” if it’s an expression (like result = a*b), or “b is a pointer to a value of type a” (more commonly written as a *b) if it’s a variable or argument declaration.

Pascal went the other way, trying to make sure the code is easy to read without having to analyze it much. The structure of the code was clearly visible, types were encouraged to be defined as closely to their meaning as possible.

The Pascal counterparts of the a*b example above are unambiguous: it’s either a multiplication written as result := a*b; or a pointer variable declared as var b: ^a; – neither the human nor the single-pass compiler has to check anything else.
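The two readings of the asterisk can be put side by side in a short C sketch. The type name a_type and both function names are invented for the illustration.

```c
/* Same symbol, two meanings, depending on what stands before it. */
typedef int a_type;

int multiply(int a, int b) {
    return a * b;          /* expression: a times b */
}

int read_through_pointer(int value) {
    a_type * b = &value;   /* declaration: b is a pointer to a_type */
    return *b;             /* and here * dereferences the pointer */
}
```

The compiler can only tell the two apart by already knowing whether the first name is a type, which is exactly the kind of lookup Pascal’s syntax avoids.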

Seems like after 8 years of having Pascal, a need to type code faster emerged ;). For further reference, there’s an entire Wikipedia entry comparing the two languages under many more aspects.

While these languages make writing more organized programs easier, the most efficient ones for the 8-bits were, and often still are, written directly in assembly. User retrac in one of the comments has some nice insight on this:

Languages like C and Pascal really demand that you have a nice, cheap stack. Classically, a function call means pushing all the parameters to the stack, and then the return address, and then jumping to the function, then pulling all the parameters off the stack, and pushing the return value on the stack, then modifying the stack pointer to drop the parameters, and then reading the return address off the stack.

Neither the Z80 or 6502, have instructions that provide an efficient stack, which works with multi-byte data. You end up constantly manipulating the stack pointer (usually for a software-implemented stack) with slow 8-bits-at-a-time arithmetic. Painful.

The PDP-11’s instruction set in comparison, provides not just one, but up to seven, flexible 16-bit stacks.

Come Forth

Another language not mentioned in the “Was BASIC that horrible…” post that actually had huge significance in that era of computing is Forth.

    : isPalindrome ( addr len -- flag )
      begin
        dup 1 >                 \ Keep going while at least two characters remain
      while
        over c@ >r              \ Save the first character on the return stack
        2dup + 1- c@            \ Fetch the last character
        r> <> if                \ Compare the characters
          2drop false exit      \ Not a palindrome, cleanup and return false
        then
        swap 1+ swap 2 -        \ Skip the first and the last character
      repeat
      2drop true                \ Palindrome, cleanup and return true
    ;

As you can see, it’s very different from all the languages discussed in this and the previous post. I’m not a Forth expert. But I can pinpoint the main differences and mention why it made sense to use it. I’ll also share some further reading links for the curious.

First and foremost, in contrast to Pascal’s structured approach and C’s procedural paradigm, Forth offers a different programming model based on stack-oriented execution and a minimalistic syntax. This means that the arguments for each operation are pushed onto a stack (the data stack, which Forth keeps separate from the return stack that holds the RETURN addresses, as described in the Recursive Programming document). The operation itself is then the last part of the command. A simple operation such as: 1 + 2

would therefore be written as: 1 2 +.

This is what we know as the “Reverse Polish Notation” or “Reverse Łukasiewicz Notation”. It allows the compiler (or interpreter) to go through the code word by word, having no memory and no expectations, and look at consecutive elements: “is this a command? No? Then push it on the stack. Is it? Execute it, it will consume the arguments from the stack”.
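The word-by-word loop described above is short enough to sketch in C. This is a toy evaluator for integers and the operators + - * only, not a real Forth; eval_rpn and STACK_MAX are names I made up.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

#define STACK_MAX 32

/* Evaluates a space-separated postfix expression.
   Returns 0 on success and stores the result in *result,
   or returns -1 on any error. */
int eval_rpn(const char *src, long *result) {
    long stack[STACK_MAX];
    int depth = 0;
    char buf[128];

    if (strlen(src) >= sizeof buf) return -1;
    strcpy(buf, src);                 /* strtok modifies its input */

    for (char *word = strtok(buf, " "); word != NULL;
         word = strtok(NULL, " ")) {
        if (isdigit((unsigned char)word[0])) {
            /* Not a command: push it on the stack */
            if (depth == STACK_MAX) return -1;
            stack[depth++] = strtol(word, NULL, 10);
        } else {
            /* A command: it consumes its arguments from the stack */
            if (depth < 2) return -1;
            long b = stack[--depth];
            long a = stack[--depth];
            switch (word[0]) {
            case '+': stack[depth++] = a + b; break;
            case '-': stack[depth++] = a - b; break;
            case '*': stack[depth++] = a * b; break;
            default:  return -1;      /* unknown word */
            }
        }
    }
    if (depth != 1) return -1;        /* leftovers or empty input */
    *result = stack[0];
    return 0;
}
```

Feeding it "1 2 +" yields 3, and "2 3 4 * +" yields 14, with no parentheses and no operator precedence rules needed.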

Via “Lost at C? Forth may be the answer“:

When you write C = A + B, the compiler puts the “equals” and “plus” operations on its pending list until it gets to the end of the expression. Then, it rewrites it as “Fetch A, Fetch B, Add, Store C”.

Forth cuts out the middle step. In Forth, you write the same operation as: A @ B @ + C !.

The @ and ! are Forth’s shorthand for the “fetch” and “store” operations. The + , oddly enough, represents addition.

This means that Forth is extremely memory and CPU efficient to execute – and let’s remember that CPUs were slower and memory was very expensive back then. This lets us find a sentence in the document quoted above that points out something we would take for granted today:

It’s often possible to develop a Forth program on the target system itself.

http://www.forth.org/lost-at-c.html?locale=en

It wasn’t always that easy to develop a program and run it on the same machine. This was not only due to the inconveniences mentioned earlier (such as switching between editor, compiler, and the created program), but also because of technical limitations. Compiling software requires loading a significantly larger amount of data into memory than the resulting program will contain. This is due to reasons as simple as the source code being larger in bytes than the compiled program (what is one line of code for a human can be three bytes for the target machine). Additionally, in order to resolve all references (such as variable names, function names, and types), they all need to fit in memory and be searchable in a reasonable amount of time.

For the same reason, if you want to target a CP/M system, an Amstrad, an Apple II, a Commodore 64, or a ZX Spectrum (and so on…) today, you would most likely try cross-compilation (most likely with C though) – write the code on a more powerful and resourceful PC, and only run it on the target platform.

Last but not least, going back to the language: Forth also allows defining your own keywords, and this extensibility allows developers to create other, usually domain-specific languages and tailor the language to specific applications.

Is it still in use? Yes!

Forth is still in use by IBM, Apple and Sun. It is used for device drivers, especially used during booting of OS. FORTH is very useful on microcontrollers, as it uses very little memory, can be fast, and easier to code than in assembly, even interactively.

https://stackoverflow.com/questions/2147952/is-forth-still-in-use-if-so-how-and-where

Today

The most common question to answer here would be “(why) are C and Pascal not used today?”.

The answer, if we check without prejudice, is “they are used”.

Based on recent discussions and developments, there seems to be a growing trend to consider replacing C and C++ with Rust in certain domains. Rust is being praised for its focus on both performance and safety, whereas the C family has historically prioritized speed over security. Some sources suggest that Rust is gaining traction and could potentially replace C++ in certain applications, but it’s important to note that C (no ++) is still actively used in many areas, especially in “close to the metal” development where fine control over operations and memory management is crucial.

The Linux kernel is written in the C programming language. In the near future, however, probably more and more of Linux and Windows kernel will be rewritten in Rust.

While it may not be as prevalent in modern software development as languages like Python, Java, or C++, Pascal was significant and is still in use in certain areas, so it has not completely disappeared from the programming landscape. However, it’s not adapting very fast to the ever-changing competition, nor incorporating new competitive features. Or at least it’s not in the news.

The language Object Pascal has been renamed to Delphi – Delphi remains an expensive commercial Rapid Application Development tool. It supports writing code for both PCs and mobile devices, as well as multi-device (Windows, macOS, Linux, iOS and Android) applications with their FireMonkey engine.

A free Delphi Community Edition exists.

In my opinion, this is one of the greatest harms that happened to the language – it became so associated with a single company, with a proprietary IDE and a prohibitive price tag, that it killed its popularity.

For as long as I can remember, buying a license for Delphi cost 3-5x more than buying a similar license for Visual Studio – and that’s long before free Visual Studio Express, not to mention open source Visual Studio Code (github).

Historically, the most mainstream Pascal was driven by Borland (which for a while called itself Inprise). Delphi later ended up with Embarcadero Technologies, its current developer and maintainer. Free alternatives like Free Pascal (which is also the name of the language, and free as in free speech) and Lazarus, the IDE for it mentioned below, tried to catch up, but it’s hard to match the size, development speed, and market of a well-funded commercial project like Delphi. Free Pascal and Lazarus are still alive and well, but they don’t evolve faster or further than the commercial line of IDEs did.

Castle Game Engine is written in Pascal and actively developed – https://castle-engine.io/

Lazarus is the free Delphi-like IDE for Free Pascal. At the time of writing, the last release was a week ago.

MAD Pascal is a cross-compiler – a 32-bit compiler for a PC to target Atari XL/XE.

Ada

Pascal spawned one more language! Named after the first programmer ever, Countess Ada Lovelace, the Ada language is not as well known as it should be, but it sure is a solid, modernized descendant of Pascal, aimed at different use cases.

Ada, created in the late 1970s, with the first implementation published in 1983, is defined as “extremely strong typed” and “supporting design by contract (DbC)”, but I would also argue that calling it “paranoid” is fair. If a program in Ada compiles at all, it probably already handles all edge cases.

Some Ada features are very remarkable today – explicit concurrency, tasks, synchronous message passing, protected objects, and non-determinism.

It had so-called generics (generic/parametrized types) since it was designed in 1977, and was the first language to popularize them. C++ added them in 1991, 14 years later. Golang, a much newer language, added them in 2022, 45 years later.

Ada also had built-in syntax for concurrency, something that was a luxury (who had multiple CPUs in 1977?), and now is one of the core aspects of improving software performance (“everybody” has at least 6 cores in 2024).

The Ada programming language was initially designed following a contract from the United States Department of Defense (DoD) from 1977 to 1983 to supersede over 450 programming languages used by the DoD at that time. Over the past 30 years it has become a de facto standard for developers of high-integrity, military applications. It is designed specifically for large, long-lived applications where reliability, efficiency, safety and security are vital.

https://www.adacore.com/industries/defense

The language is your best friend if you want to write software for your nuclear reactor or your military systems (see the quote above). As an example from Czechia, the document “Verification and Validation of Software Related to Nuclear Power Plant Instrumentation and Control” mentions “Use of modern real time languages such as ADA and OCCAM.” as the best starting point for a formalized description of such verification and validation. Even so, Ada never became as popular as many other languages.

The syntax and learning curve for Ada may be perceived as steeper compared to other languages, which can deter some developers.

The language, as usual, is not dead. The latest spec is from 2022.

It also seems like languages that use more and longer words are falling out of favor in times when everybody is in a rush.

- begin and end are less preferred than { and }
- procedure and function are a lot of letters. Other languages do func, fn or even nothing at all (like (args) => body in modern JS).

The observation above relates to human languages just as much as to programming ones.

Dig deeper: links and media

Niklaus Wirth’s Algorithms and Data Structures on Archive.org – can be borrowed

Why Forth? – a post on Reddit

History of C on cppreference.com

Ada 2022 Language Reference Manual.

The IDEs we had 30 years ago… and we lost – a recent and interesting post about the text interfaces of Turbo Pascal and similar!

Some nitpicks:

* BASIC was not a “compiled language” in the modern sense in most popular implementations. e.g. Commodore BASIC transformed the input text by replacing keywords with bytecode tokens, but this could be trivially reversed to recover the original source code.

* For Pascal, you might want to mention the very popular UCSD Pascal implementation.

* While Ada was in much more widespread use (partially due to government mandates), parametrized types were introduced some years earlier in CLU.

Totally agreed – BASIC in the home computing era was interpreted. But the BASIC that Dijkstra and the previous post were talking about *was*, however, compiled. I think I may not have made that context clear (it was a recap of the previous post).

I’ll read a bit about UCSD Pascal, it does seem to have been one of the most important dialects before Turbo took the market; and I’ll update the article 🙂

Thank you, appreciated!

Nice and comprehensive article. I think that a good compiler has the job of optimizing the code without sacrificing safety, and Pascal has many more opportunities to do so safely (replacing i:=i+1 with inc’s for example, using registers for variables etc.) than C, due to the language design. I also think that it is a pity that multiple assignment from Dijkstra’s ideas has not been adopted… Thanks for the article anyway…

In the early 80s, I was not a professional programmer, but I wrote a lot of code. Some on other computers, but mostly on PCs, in Microsoft BASIC and assembler. I learned early on that assembler programmers create a large library of reusable code, often recreating tools found in higher-level languages. It’s still a pain to write and debug. I saw an ad for Turbo Pascal in Byte I think, maybe in 1984 or so. I couldn’t believe how easy it was to learn and use, with speed pretty close to assembler. Later versions allowed the use of assembler code inside the Turbo Pascal source, in hexadecimal, so I had tools for assembling my code and converting it to a format suitable for inclusion in Turbo Pascal. I still have the IDE keystrokes implanted in my brain.