Was BASIC that horrible or… better?

Everything was fine until BASIC entered the picture.

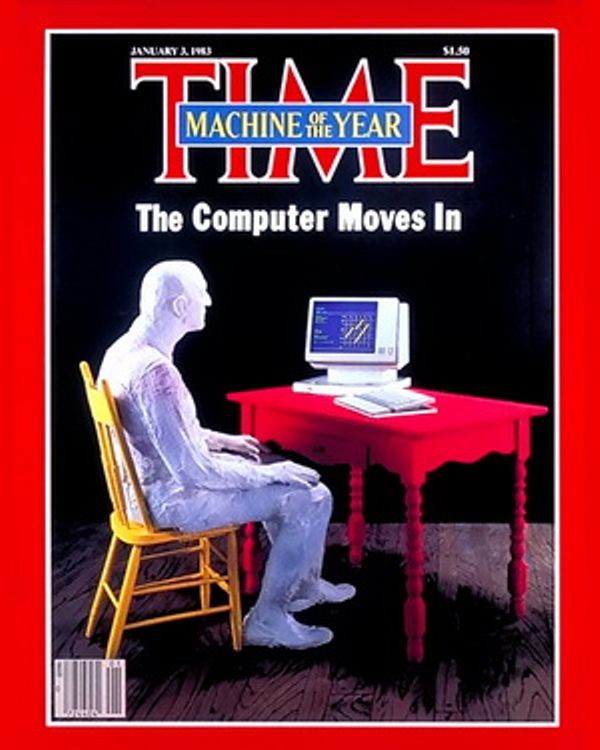

Edsger Dijkstra in a hallucination of 2023’s AI

Simplicity is the ultimate sophistication.

Leonardo Da Vinci

It is practically impossible to teach good programming to students that have had a prior exposure to BASIC: as potential programmers they are mentally mutilated beyond hope of regeneration.

Edsger Dijkstra,

How do we tell truths that might hurt?, 18 June 1975

Selected Writings on Computing: A Personal Perspective, Springer-Verlag, 1982. ISBN 0–387–90652–5.

Last edit: 2024-01-19.

The article was posted to HackerNews on 2023-12-23 🙂

2023-12-24: Updated the mentions around Fortran reference – the 1976 example was too modern and not the standard at the time. Added a paragraph on why did BASIC still use line numbers in the 80s. Added mention of Python as contemporary alternatives, and an ending note that the language wasn’t the only factor for the ones who started programming in BASIC on home computers.

2023-12-28: corrected links mentioning HN comments to point to the comments, not the profiles.

2024-01-19: added BBC Microbot examples now that the gallery is back!

BASIC was responsible for sparking the interest in computers for a generation (to quote glimshe’s comment). And yet Edsger Dijkstra, a renowned computer scientist, famously made the controversial statement quoted above in a bullet-point list of inconvenient truths that he published in 1975. Dijkstra was known for his strong opinions on programming languages, and he believed that the simplicity and lack of structured programming principles in BASIC could hinder students from developing a strong foundation in programming.

If we dig into what caused this statement, which made me feel personally attacked at some point ;), it’s a pretty fascinating rabbit hole (as everything in computing is, if you stay curious). And to keep that personal feeling apart from the facts, let me assure you that I will clearly separate facts from opinions in this kind of post!

What’s the context of the famous quote?

Dijkstra’s opinion was so strong and caused so much offense that today’s Generative Pre-trained Transformers seem to associate him with enough hatred for the language to imagine quotes such as “Everything was fine until BASIC entered the picture”, something he never actually said.

However, one could argue that the second quote, the one praising simplicity, contradicts him: BASIC is itself quite simple. That should make it a much better tool, allowing the programmer to focus on the goal. Like modern crippled Golang ;-). Does it mean BASIC is too simple? Were all the popular alternatives better?

It is important to note that Dijkstra’s statement was made in a different era, when BASIC was one of the few widely accessible programming languages. Today, there are newer, more powerful programming languages and resources available that can help students develop strong programming skills, regardless of their prior exposure to BASIC, which supports reading the statement as hyperbole used to prove a point.

The statement was also just a bullet point in a bigger list of similarly negative declarations, such as “PL/I –“the fatal disease”– belongs more to the problem set than to the solution set.” (remember? PL/I was the language behind Multics, a project that – by failing to meet the schedule – gave us Unix) or, much harsher, “The use of COBOL cripples the mind; its teaching should, therefore, be regarded as a criminal offense.”

Going back to the “is it too simple?” question, we can look at BASIC’s evolution in three eras:

- The 70s, when it made him so resentful

- The 80s and 90s, when his statement made home computer users so resentful

- BASIC today

BASIC throughout the years

The 70s

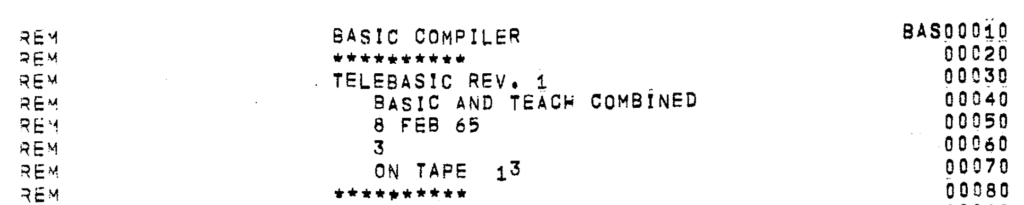

The flavor of BASIC most likely criticized by Dijkstra is Dartmouth BASIC, the original (!) version of the BASIC programming language, from 1964. According to Wikipedia, it was designed by two professors at Dartmouth College, John G. Kemeny and Thomas E. Kurtz. With the underlying Dartmouth Time Sharing System (DTSS), it offered an interactive programming environment to all undergraduates as well as the larger university community.

It wasn’t the BASIC most older computer users worldwide know. In fact, it wasn’t even an interpreted language, but a compiled one!

A note to the beginner:

Compiled languages, like most, but not all, of the ones popular today (C, C++, Go, Rust), are those where a program cannot be directly executed after it was written. Instead, it has to go through the process of checking all the references between files and modules (including answering questions such as: where do they come from? do they exist? are all the values the correct type?) and translating each instruction into a set of lower-level instructions directly executable by the computer’s main processor. This process is known as compilation. It can take a while, from a few seconds for a moderate-size code base to many hours (Chromium, the core part of the Chrome browser, would take 6-8 hours to compile on a 4-core machine).

This means a few things – first of all, it’s always a two-step process, not as interactive as running commands one by one. You have to wait for the program to check all the code for syntax errors and compile first, which comes with the benefit of basic (sic!) error check before you even execute the code.

Interpreted languages, or rather programming language interpreters are the other way to execute code. Here, traditionally each instruction from the source code is translated to executable machine code only as the execution reaches that particular instruction.

This, in turn, means some errors may go unnoticed until we reach a given point in the code, such as referencing a variable that doesn’t exist, or is of a type incompatible with the way we want to interact with it. A common benefit, however, is that interpreted languages are usually less strict about the type of a variable, and most constructs can operate just as happily with integer numbers, as with floating point (the ones with the fractional part), or even dynamically adapt to when it’s a string (text). Python, for example, extends this concept to all objects with an idea called if it looks like a duck and quacks like a duck, then it probably is a duck. And you can use it in all places of the code where a duck would be expected.
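As a minimal sketch of both points above (duck typing, and errors that only show up when execution reaches the offending line), here is a small Python example. The class and function names are made up for illustration only.

```python
class Duck:
    def speak(self) -> str:
        return "Quack"


class Robot:
    # Not related to Duck in any way, it just offers the same method.
    def speak(self) -> str:
        return "Beep"


def make_it_speak(thing) -> str:
    # No type check here: anything with a speak() method is accepted.
    return thing.speak()


def try_speaking(thing) -> str:
    # A mismatch is only discovered when the call actually runs.
    try:
        return make_it_speak(thing)
    except AttributeError:
        return "cannot speak"


print(make_it_speak(Duck()))
print(make_it_speak(Robot()))
print(try_speaking(42))
```

Nothing complains about passing `42` until the call is executed, which is exactly the trade-off described above.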

With time, the distinction between compiled and interpreted code has gotten blurry, and performance gap tightened over the years. Traditionally interpreted languages, such as JavaScript, use techniques like Just-In-Time compilation nowadays, meaning a fragment of the code is indeed actually compiled before execution, and can even be automatically re-compiled with more optimization tricks, if it executes often.

Some languages, like Java or C#, have also been considered a bit of both worlds – the compilation stage translates their source code to a so-called “byte-code” which is much lower-level, but doesn’t execute directly on the hardware – instead, those simplified instructions translate to one or more hardware instructions at runtime.

While Dartmouth BASIC was a compiled language, it didn’t bring all the downsides of having the extra compilation step before execution. With many other compiled languages of the 80s on home computers, if we had “only” one computer, and a single-tasking one, the cycle probably included the need to exit the compiler, load the program, see it fail, load back the compiler, load our files, fix the problem, compile again… all of this lengthening the update cycle significantly. Dartmouth did better. At any time, when the program was in memory, you could use SAVE to save it from being forgotten when you finish working with the computer, and RUN to compile and execute it right away. The RUN command is familiar to users of our later 8-bit home computer BASIC environments, in which it instructs the computer to start interpreting the code line by line.

It also had the most recognizable feature of BASIC — each line begins with the line number, so even if you don’t have an editor available, you can add lines at arbitrary positions between the existing ones (hence also the convention to number them in increments of 10, rather than 1, 2, 3… — gives you the chance to insert some more code between lines 10 and 20, if needed).

Why would you not have an editor? It’s not that they didn’t exist, even documentation from languages from the 60s mentions a few text editors. The problem was that editors require resources, and for decades computer software really wanted to spare every kilobyte not needed, and leave it free for the user programs. Or games.
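The line-number trick described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular BASIC stored its programs; the `enter` and `listing` helpers are hypothetical, and the "bare number deletes the line" behaviour is an assumption borrowed from how many BASICs worked.

```python
def enter(program: dict, typed: str) -> None:
    """Store, replace, or delete a numbered line, the way a line editor would."""
    number, _, statement = typed.strip().partition(" ")
    if statement:
        program[int(number)] = statement
    else:
        # Assumption: typing a bare line number deletes that line.
        program.pop(int(number), None)


def listing(program: dict) -> list:
    """Return the program in line-number order, regardless of typing order."""
    return [f"{n} {program[n]}" for n in sorted(program)]


program = {}
enter(program, '10 PRINT "HELLO"')
enter(program, "20 END")
enter(program, '15 PRINT "WORLD"')  # squeezed in between 10 and 20
print("\n".join(listing(program)))
```

No cursor, no screen editor: the number alone tells the system where the line belongs, which is why leaving gaps of 10 was such a useful habit.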

The first version of the language was extremely limited, compared to any later popular version of BASIC. The only supported keywords apart from math functions were: LET, PRINT, END, FOR...NEXT, GOTO, GOSUB...RETURN, IF...THEN, DEF, READ, DATA, DIM, and REM. This means very basic flow control, I/O, comments, and basic arrays. The last feature is not trivial, so it was one of the few features that made it more useful than assembly language. Variable names were limited to a single letter or a letter followed by a digit (286 possible variable names), which made the programs much harder to read (by a human being) than they should be.
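Where does 286 come from? It is 26 single letters plus 26 × 10 letter-and-digit pairs. A quick Python check, enumerating the names rather than trusting the arithmetic:

```python
import string

letters = string.ascii_uppercase  # A..Z
digits = string.digits            # 0..9

# Single-letter names, then every letter followed by one digit.
names = list(letters) + [letter + digit for letter in letters for digit in digits]

print(len(names))  # 26 + 26 * 10 = 286
```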

By 1975 the language reached its Sixth edition. User input was added, and a number of math functions were there (along the lines of ABS, LOG, RND, SIN). Did it allow “normal” (longer) variable names? No trace of such a feature. Did it allow full commands (statements) in IF...THEN? Also no! It was only a conditional GOTO statement, meaning you had to write it as IF A>0 THEN 100, where 100 is the line to jump to if the condition is met.

I can see where the rage against the machine running BASIC was coming from when I consider the classical “guessing game” example (the computer picks a random number, and the user is supposed to guess the number, being given hints like “too large” or “too small”):

100 REM GUESSING GAME

110

120 PRINT "GUESS THE NUMBER BETWEEN 1 AND 100."

130

140 LET X = INT(100*RND(0)+1)

150 LET N = 0

160 PRINT "YOUR GUESS";

170 INPUT G

180 LET N = N+1

190 IF G = X THEN 300

200 IF G < X THEN 250

210 PRINT "TOO LARGE, GUESS AGAIN"

220 GOTO 160

230

250 PRINT "TOO SMALL, GUESS AGAIN"

260 GOTO 160

270

300 PRINT "YOU GUESSED IT, IN"; N; "TRIES"

310 PRINT "ANOTHER GAME (YES = 1, NO = 0)";

320 INPUT A

330 IF A = 1 THEN 140

340 PRINT "THANKS FOR PLAYING"

350 END

src: The example comes directly from Dartmouth College.

As you see, the code looks braindead simple, with conditional jumps (IF G = X THEN 300) that require you to jump along, taking your focus with you, and that do not support ELSE statements. In this simple example, an ELSE is realized like in assembly: by omission and just continuing over. If you didn’t get the number right and didn’t make the jump in line 190, the number you provided is either too small, in which case you jump from line 200 to line 250, or you just follow along to line 210, because after ruling out the numbers being equal and G<X, the only other option left is G>X.
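For contrast, here is a rough sketch of the same decision logic in a language with if/else blocks, written in Python. It is not a line-for-line port: the `hint` and `play` function names are mine, and the guesses are passed in as a list instead of being read from a teletype so the example stays self-contained.

```python
def hint(guess: int, secret: int) -> str:
    # The three outcomes sit next to each other; nothing to jump to.
    if guess == secret:
        return "YOU GUESSED IT"
    elif guess < secret:
        return "TOO SMALL, GUESS AGAIN"
    else:
        return "TOO LARGE, GUESS AGAIN"


def play(secret: int, guesses) -> int:
    """Return the number of tries used (all of them if nobody guessed right)."""
    tries = 0
    for guess in guesses:
        tries += 1
        if hint(guess, secret) == "YOU GUESSED IT":
            break
    return tries


print(hint(50, 42))
print(play(42, [50, 25, 42]))
```

The "else by omission" from lines 190 to 210 becomes an explicit branch, and the reader no longer has to follow line numbers around the listing.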

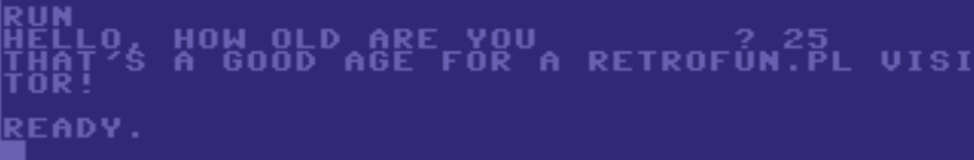

All the code looks very flat compared to today’s standards. This is because most BASIC implementations not only in the 60s and 70s, but also some in the 80s and 90s, did not handle unexpected whitespace very well. You already know the reason why you don’t see indented blocks within IF...THEN blocks… there’s nothing to indent, if you can’t put commands in the THEN clause, and can’t use multiple ones even in the BASIC versions where you can. This is cheating, but let’s peek into 1982 and check out Commodore 64’s code:

10 PRINT"HELLO, HOW OLD ARE YOU",

20 INPUT A

30 IF A > 30 THEN

40 PRINT"THAT'S A GOOD AGE FOR A RETROFUN.PL VISITOR!"

50 END

What may happen if a 25-year-old user executes it is:

While 25 is definitely a good age for a RetroFun.pl visitor, we can see that line 40 is not executed only when the condition A>30 is met, and there is no error about our THEN block being effectively empty either. Any code structure is going to be based on GOTO, GOSUB (that’s a GOTO that can RETURN to where it was called from) and more “flat” lines of code.

(Oh, I actually cheated twice – using END that ends the program in a way that may have tricked you into thinking it has anything to do with the IF statement).

One more elephant in the room

The same source provides an example of how to plot a bell curve (amazingly simple to calculate in this math-heavy university-ready BASIC):

100 REM PLOT A NORMAL DISTRIBUTION CURVE

110

120 DEF FNN(X) = EXP(-(X^2/2))/SQR(2*3.14159265)

130

140 FOR X = -2 TO 2 STEP .1

150 LET Y = FNN(X)

160 LET Y = INT(100*Y)

170 FOR Z = 1 TO Y

180 PRINT " ";

190 NEXT Z

200 PRINT "*"

210 NEXT X

220 END

You might have noticed that the language doesn’t have any keywords making it easy to actually plot anything on the screen (as in: light up a pixel), and the example also kept it down to text mode.

But it’s not a missing feature of the language, or rather not a necessary but missing feature. If you asked about it, they would reply with…

What screen?

The elephant in the room is the computer the size of an elephant. Dartmouth BASIC was, like the system it worked on (DTSS), operated from remote terminals, which challenge even today’s definition of a lightweight terminal (you’d assume a simple device with a keyboard and a screen… oh, and lightweight), or a terminal as in the 80s. Such a terminal was essentially a heavy desk with a keyboard and a remotely-controlled typewriter (no, not a dot matrix printer). That’s where the “Teletype” name comes from.
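To show that the text-mode approach needs nothing but a printer, here is a rough Python equivalent of the bell-curve listing above. It is a sketch rather than an exact port: it uses `math.pi` instead of the hard-coded 3.14159265, and it steps through integers divided by 10 to avoid accumulating floating-point error in the `STEP .1` loop.

```python
import math


def fnn(x: float) -> float:
    # Same role as DEF FNN in the listing: the standard normal density.
    return math.exp(-(x ** 2) / 2) / math.sqrt(2 * math.pi)


def plot_rows() -> list:
    rows = []
    for i in range(-20, 21):         # x from -2.0 to 2.0 in steps of 0.1
        x = i / 10
        y = int(100 * fnn(x))        # column where the star goes
        rows.append(" " * y + "*")   # y spaces, then the point itself
    return rows


for row in plot_rows():
    print(row)
```

Each output line is one value of X, so the curve comes out rotated by 90 degrees, which is perfectly fine on an endless roll of paper.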

Competition (though also considered bad)

Is it a programming language? Yes. Is it a nice one? No. Is it better than the competition? Well, Dijkstra in the same paper referred to PL/I, COBOL, APL (a wonderful mix of praise and mockery: “APL is a mistake, carried through to perfection. It is the language of the future for the programming techniques of the past: it creates a new generation of coding bums.”), and FORTRAN (“hopelessly inadequate”).

By the way, if “PL” rings a “PL/SQL” bell for you, yes, there is some similarity between these two, but they are not the same language (the acronym represents different names too, “Programming Language” One vs “Procedural Language” in SQL). Even a simple for loop is a bit different (see below). On the other hand, both languages use keywords like BEGIN and END instead of curly braces, which makes them more similar to each other, but also to Pascal or Ada (the last two are a separate new world we can explore).

-- PL/SQL:

FOR i IN 1..10 LOOP

DBMS_OUTPUT.PUT_LINE(i);

END LOOP;-- PL/I:

DO I = 1 TO 10;

PUT SKIP LIST(I);

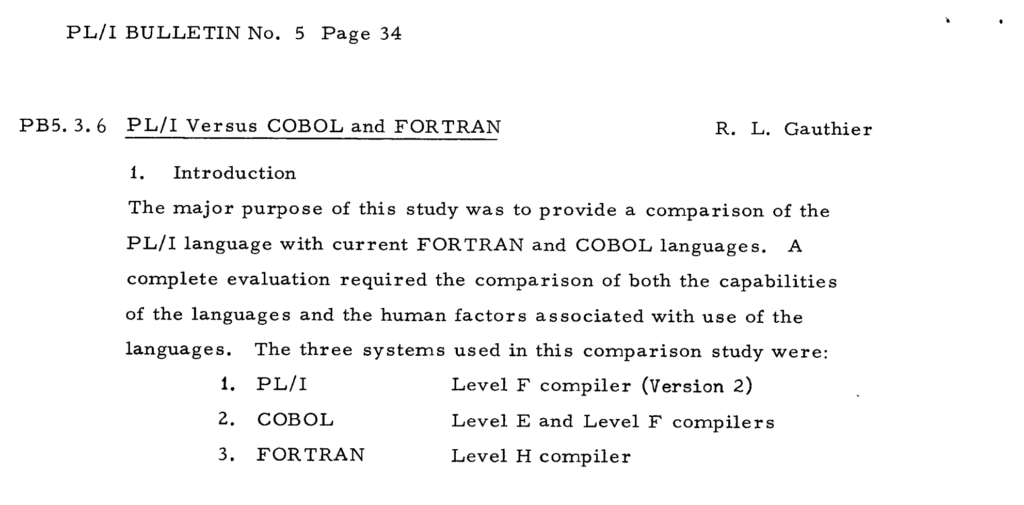

END;Interestingly, a comparison of PL/I, COBOL and Fortran was published in December 1967 in the PL/I bulletin issue 5.

They compared the languages using good criteria: difficulty to learn, difficulty to use (the latter measured by the number of statements needed to achieve a particular goal), and applicability in scientific and business cases. However, due to the small publication size (a letter to a bulletin), the results are more quoted than presented, and statistics for the number of statements are given without the source code. Unsurprisingly (it’s a PL/I bulletin after all), PL/I was considered superior in some areas, and at least just as good in others. It aimed to combine the best features of the other two. In reality it also had problems keeping up with their individual development, which may be the reason for its smaller adoption.

Some PL/I example code from the time can be found in the same issue (see PL/I bulletin archive), but the scan quality makes it hard to embed or quote in a post. However, I looked up an example from October 1976 (page 92), from the compiler documentation, and, in my opinion, it shows how much more structure the code had (note: indentation, complex if-else, procedures, procedure arguments, and the fact that the arguments can have the same name as variables outside, shadowing them – not possible in BASIC at the time). By the way, keywords are case-insensitive.

A: PROCEDURE;

DECLARE S CHARACTER (20);

DCL SET ENTRY(FIXED DECIMAL(1)),

OUT ENTRY(LABEL);

CALL SET (3);

E: GET LIST (S,M,N);

B: BEGIN;

DECLARE X(M,N), Y(M);

GET LIST (X,Y);

CALL C(X,Y);

C: PROCEDURE (P,Q);

DECLARE P(*,*), Q(*),

S BINARY FIXED EXTERNAL;

S = 0;

DO I = 1 TO M;

IF SUM (P(I,*)) = Q(I)

THEN GO TO B;

S = S+1;

IF S = 3 THEN CALL OUT (E);

CALL D(1);

B: END;

END C;

D: PROCEDURE (N);

PUT LIST ('ERROR IN ROW ', N, 'TABLE NAME ', S);

END D;

END B;

GO TO E;

END A;

OUT: PROCEDURE (R);

DECLARE R LABEL,

(M,L) STATIC INTERNAL INITIAL (0),

S BINARY FIXED EXTERNAL,

Z FIXED DECIMAL(1);

M = M+1; S=0;

IF M<L THEN STOP; ELSE GO TO R;

SET: ENTRY (Z);

L=Z;

RETURN;

END OUT;

The language spec from 1965 also documents supporting complex data structures (records), arrays, and arrays of structures. Page 65 defines:

A structure is a hierarchical collection of scalar variables, arrays, and structures. These need not be of the same data type nor have the same attributes.

IBM Operating System/360, PL/I: Language Specifications (July, 1965)

None of this existed in BASIC for decades, and knowing this limitation and the atrocities it would cause a BASIC programmer to commit in their code, can definitely lead to thinking it ruins your chances of teaching yourself the right habits.
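To make the "atrocities" a bit more concrete, here is a small Python sketch of what missing records mean in practice. The first half mimics the BASIC way, one array per field tied together only by a shared index; the second half uses a record type. The sample data and the `Person`, `oldest_parallel` and `oldest_record` names are made up for illustration.

```python
from dataclasses import dataclass

# BASIC-style: parallel arrays. Nothing stops them from drifting out of sync.
names = ["ANN", "BOB", "CAT"]
ages = [31, 58, 44]


def oldest_parallel(names: list, ages: list) -> str:
    best = 0
    for i in range(1, len(ages)):
        if ages[i] > ages[best]:
            best = i
    return names[best]  # correct only as long as both lists stay aligned


# Record-style: the fields travel together, as in a PL/I structure.
@dataclass
class Person:
    name: str
    age: int


people = [Person("ANN", 31), Person("BOB", 58), Person("CAT", 44)]


def oldest_record(people: list) -> str:
    return max(people, key=lambda p: p.age).name


print(oldest_parallel(names, ages), oldest_record(people))
```

Both versions give the same answer here, but only the second one survives somebody sorting one of the arrays and forgetting the other.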

COBOL was somewhat similar. It was based on the Flow-Matic data processing language designed by Grace Hopper. In 1950 Hopper became a Systems Engineer and Director of Automatic Programming Development of the UNIVAC Division. She had experience with, and continued her work on, compilers, publishing her first paper on that topic in 1952. She then participated in the work to produce specifications for a common business language. Since Flow-Matic was the only existing business language at that time, it also served as the foundation for the specification of the language COBOL (COmmon Business-Oriented Language) which eventually came out in 1959. Her aim was that there should be international standardization of computer languages.

The first version of COBOL was published in 1960, and the most recent one in 2023.

Random thoughts from some COBOL fact-check:

- It’s old enough for the code style guide / format spec to consider punch cards, but that’s not the only option: “Your program can be punched on an off-line card punch or created with an on-line text editor.”

- The punch card format is the one that standardized 80 characters per line. Otherwise the examples mention 122 characters per line. Go check your .editorconfig

- Reading COBOL’s manual is as nice as reading today’s Linux man pages; the format is similar as well.

What could make it better than BASIC, and what could have made Dijkstra say it cripples the mind?

Pros, in my opinion: it had record data structures too, and it allowed complex and nested IF statements… and that’s about it.

Cons: it was very wordy (not necessarily a downside, but VERY wordy), including but not limited to always specifying 4 predefined sections (“divisions”) of the code, even if empty. Variable declaration looks bizarre by today’s standards. It uses many English words with the goal of being verbose and self-documenting, but the lack of quicker-to-grasp symbols actually got it considered “incomprehensible”. It was criticized for being driven by commerce and the government, rather than academics. COBOL supported procedures, but they weren’t widely adopted (more people used GO TO statements), and, similarly to Dartmouth and other BASICs, there was no way to pass parameters to a procedure, so they weren’t as useful for code readability or maintenance.

This situation improved as COBOL adopted more features. COBOL-74 added subprograms, giving programmers the ability to control the data each part of the program could access. That couldn’t save the reputation of a language associated with huge, monolithic and unstructured spaghetti code, and it kept falling behind in popularity. By 1985, there were twice as many books on FORTRAN and four times as many on BASIC as on COBOL in the Library of Congress.[wikipedia]

Since this post shows code samples in all the languages, here’s a simple COBOL reference:

PRG 11 Write a program to perform the arithmetic operations using Arithmetic Verbs. (Workout with Integer Nos, Decimal Nos and Signed Nos).

* by surender, www.suren.space

IDENTIFICATION DIVISION.

PROGRAM-ID. PRG10.

ENVIRONMENT DIVISION.

DATA DIVISION.

WORKING-STORAGE SECTION.

77 NUM1 PIC 9(4).

77 NUM2 PIC 9(4).

77 TOTAL PIC 9(5).

PROCEDURE DIVISION.

ACCEPT NUM1.

ACCEPT NUM2.

ADD NUM1 TO NUM2 GIVING TOTAL.

DISPLAY TOTAL.

SUBTRACT NUM1 FROM NUM2 GIVING TOTAL.

DISPLAY TOTAL.

MULTIPLY NUM1 BY NUM2 GIVING TOTAL.

DISPLAY TOTAL.

DIVIDE NUM1 BY NUM2 GIVING TOTAL.

DISPLAY TOTAL.

STOP RUN.

Fortran IV and 70

Another “bad language”, yet one of the biggest at the time, so still a BASIC competitor in the late 70s:

Fortran –“the infantile disorder”–, by now nearly 20 years old, is hopelessly inadequate for whatever computer application you have in mind today: it is now too clumsy, too risky, and too expensive to use.

Edsger Dijkstra, How do we tell truths that might hurt?

From a great distance, Fortran has some similarities to COBOL. It was important when it was created in 1957 by John Backus because it was the first widely recognized (and probably second in history, after Speedcoding language from the same creator) more general, higher level language, replacing widespread use of direct assembly programming, which was extremely platform-specific (from the 50s to the 90s, there was a huge variety of CPU families and machine languages the computers “spoke” internally; we then settled on x86 for a while, driven by IBM PC adoption, and we’re diverging into x86 vs ARM today again).

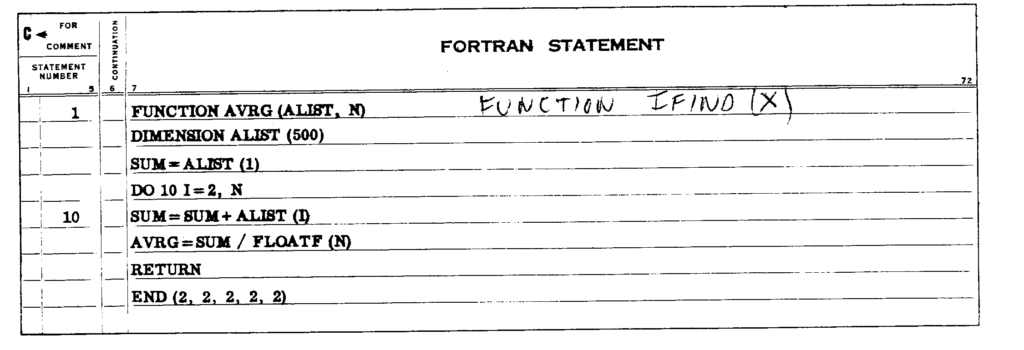

A code sample shared by vajrabum on HN, in place of the Fortran 70 example I first included, shows what a typical piece of Fortran code looked like at the time:

C AREA OF A TRIANGLE - HERON'S FORMULA

C INPUT - CARD READER UNIT 5, INTEGER INPUT, ONE BLANK CARD FOR END-OF-DATA

C OUTPUT - LINE PRINTER UNIT 6, REAL OUTPUT

C INPUT ERROR DISPAY ERROR MESSAGE ON OUTPUT

501 FORMAT(3I5)

601 FORMAT(4H A= ,I5,5H B= ,I5,5H C= ,I5,8H AREA= ,F10.2,

$13H SQUARE UNITS)

602 FORMAT(10HNORMAL END)

603 FORMAT(23HINPUT ERROR, ZERO VALUE)

INTEGER A,B,C

10 READ(5,501) A,B,C

IF(A.EQ.0 .AND. B.EQ.0 .AND. C.EQ.0) GO TO 50

IF(A.EQ.0 .OR. B.EQ.0 .OR. C.EQ.0) GO TO 90

S = (A + B + C) / 2.0

AREA = SQRT( S * (S - A) * (S - B) * (S - C) )

WRITE(6,601) A,B,C,AREA

GO TO 10

50 WRITE(6,602)

STOP

90 WRITE(6,603)

STOP

END

It wasn’t pretty, and relied on line prefixes just as hard as COBOL (with the C for Comment), but also had (optional!) line numbers that you could GO TO, like BASIC. BASIC borrowed that feature from Fortran, not only to provide some kind of labels for where you want to jump in the code, but also to make the teletype line-based editing easier. This means the line numbers, which are just jump labels here, were the way to make coding interactive when doing it on-line, for the remotely operated computers.
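For readers who find the FORMAT statements distracting, the computational core of the Heron’s formula listing above fits in a few lines of Python. This is a sketch of the calculation only: the card reader and line printer are gone, and the `triangle_area` name is mine.

```python
import math


def triangle_area(a: int, b: int, c: int) -> float:
    # Mirrors the check that jumps to label 90 in the Fortran listing.
    if a == 0 or b == 0 or c == 0:
        raise ValueError("INPUT ERROR, ZERO VALUE")
    s = (a + b + c) / 2.0  # semi-perimeter
    return math.sqrt(s * (s - a) * (s - b) * (s - c))


print(triangle_area(3, 4, 5))  # 6.0
```

The three GO TO targets of the original (read the next card, normal end, input error) turn into a loop, a return, and an exception in a more structured language.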

Fortran 70, released later, provided programmers with more structure: complex and nested conditional statements, and SUBROUTINE and FUNCTION statements. However, it tricked me with its name into thinking it must have been popular by ’75. This is not the case: Fortran IV and 66 were still in use. Fortran 70 was more of a spec that was being worked on, and only in 1978 was the version called Fortran 77 released. So at the end of the 70s we could see some Fortran code replacing GOTOs with nested IF statements:

PROGRAM C1202A

INTEGER YEAR,N,MONTH,DAY,T

C

C CALCULATES DAY AND MONTH FROM YEAR AND DAY-WITHIN-YEAR

C T IS AN OFFSET TO ACCOUNT FOR LEAP YEARS

C NOTE THE FIRST CRITERIA IS DIVISION BY 4

C BUT THAT CENTURIES ARE ONLY LEAP YEARS IF DIVISIBLE BY 400

C NOT 100 (4*25) ALONE

C - CORRECTED 14/3/12

C

PRINT*,' YEAR, FOLLOWED BY DAY WITHIN YEAR'

READ*,YEAR,N

C CHECKING FOR ORDINARY LEAP YEARS

IF(((YEAR/4)*4).EQ.YEAR)THEN

T=1

IF ((YEAR/400)*400.EQ.YEAR)THEN

T=1

ELSEIF((YEAR/100)*100.EQ.YEAR)THEN

T=0

ENDIF

ELSE

T=0

ENDIF

C ACCOUNTING FOR FEBRUARY

IF(N.GT.(59+T))THEN

DAY=N+2-T

ELSE

DAY=N

ENDIF

MONTH=(DAY+91)*100/3055

DAY=(DAY+91)-(MONTH*3055)/100

MONTH=MONTH-2

PRINT*,' CALENDAR DATE IS ',DAY,MONTH,YEAR

END

The syntax is slightly more reasonable when we, once again, see how much older the language is, and how much more paper the default code format was.

You can see the line numbers being just an optional “statement number” here at the time, to make it easier to refer to the statement when jumping to it from another part of the program.
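The arithmetic in that Fortran 77 program is compact enough to be worth unpacking. Below is a Python port I wrote as a sketch: it keeps the same leap-year test and the same integer-division trick with 3055, only wrapped in two functions (`is_leap` and `calendar_date` are my names, not part of the original).

```python
def is_leap(year: int) -> bool:
    # Same rules as the nested IF: divisible by 4, except centuries
    # that are not divisible by 400.
    if year % 4 != 0:
        return False
    if year % 400 == 0:
        return True
    return year % 100 != 0


def calendar_date(year: int, n: int) -> tuple:
    """Convert day-within-year n (1-based) to (day, month)."""
    t = 1 if is_leap(year) else 0
    # After February, pretend February had 30 days so months alternate evenly.
    day = n + 2 - t if n > 59 + t else n
    month = (day + 91) * 100 // 3055   # integer division, as in Fortran
    day = (day + 91) - (month * 3055) // 100
    return day, month - 2


print(calendar_date(2024, 60))  # (29, 2)
print(calendar_date(2023, 60))  # (1, 3)
```

The constant 3055 encodes an average month length of 30.55 days, which is enough to land on the right month once February has been padded.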

Looking at PL/I, FORTRAN and COBOL, however, we see that even the literally earliest programming languages in existence had more structured programming features than BASIC did (defining functions with their own arguments, nested complex conditional statements).

These languages were not all that was on the market in the 1970s, but they were indeed the most popular ones! We will dig into the emerging alternatives separately.

Among the most popular ones, FORTRAN, COBOL and BASIC were all still more like syntactic sugar on top of assembly language.

To mention the choices that would be more obvious in the next decades: Niklaus Wirth designed Pascal in 1970. By the end of the decade it got significant popularity. Similar growth has been seen for C.

The 80s/90s

What changed?

Some years passed, and technological progress began to make computers smaller (than the room they are in), and smaller, and affordable to more than just the government, universities, and the biggest companies. The home computing revolution started.

The Steves and Ronald Wayne soldered together their Apple computers in 1976, followed by the insanely popular (in the US) Apple II in 1977. These had 4kB of RAM, a CPU running at 1 MHz, and were sold well under $1000. The Apple I was an “affordable” $666 in 1976; bear in mind this would be $3600 in 2023 (via inflation calculator).

1977 was the year the Atari 2600 was released, popularizing computer gaming at home, something that was revolutionizing the electronic entertainment industry, which used to thrive in arcades with custom-built, and quite amazing, arcade game machines.

The 80s opened a sack of wonder and miracle: the TRS-80, Commodore VIC-20, and ZX-80 came out in 1980. ZX-81 improved on that in ’81.

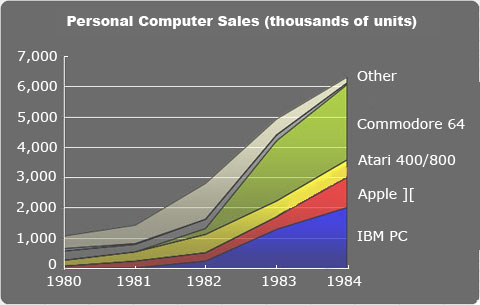

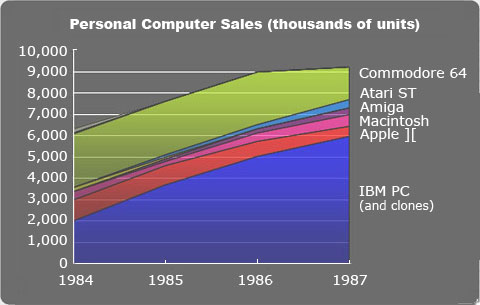

This is when the home computers remembered most fondly, and that redefined the market for decades, started appearing, starting in 1982 and peaking around 1985: listing them in their 64kB/128kB pairs, they were Commodore 64/128, Atari 65/130XE, Amstrad CPC464/6128. Even the graphically mesmerizing MSX and Amiga 500 came out by 1985! You can see this is also where the sales slowed down (reasons for this are also a post-worthy material), and the IBM variant of “a personal computer” became “the personal computer”.

We don’t say “personal computer” anymore today, it’s just a computer. But this wasn’t always so simple. As you’ve seen with the terminals (huge desks with an automated typewriter, connected to a multi-user time-sharing mainframe), computers were an academic supertool for the previous two to three decades. It wasn’t until the 80s that they appeared in many homes, and had users who were not scientists, engineers, or corporations.

John Smith writes his first program

Ok, back to BASICs! As you can see, this is where the possibility of computer programming appeared to regular people, to regular computer users. The average ZX Spectrum, Amstrad, Atari and Commodore, as long as they had a built-in keyboard, greeted the user with the amazing “READY” prompt of the BASIC language, ready to be programmed within a second from powering up!

The needs for a programming language for the regular Jane Doe were a little different than for a scientist-computer from the 60s (it’s often said that we sent humans to the Moon with a computer with less power than a modern calculator, but it was the women at NASA who were the computers that sent humans to the Moon!)

The need for something easy to use, simple, less academic, and not crippling the mind 🙂 placed BASIC as topmost candidate. The language was also simple enough to be interpreted, thanks to having a limited number of keywords, and no structure/variable scope to track (all variables are global).

The user experience

It’s not even a “developer experience”, as the 8-bits from the 80s booted straight into BASIC, encouraging all users to begin their adventure with programming.

Many great programs can be created in BASIC – examples include even submarine fleet control software.

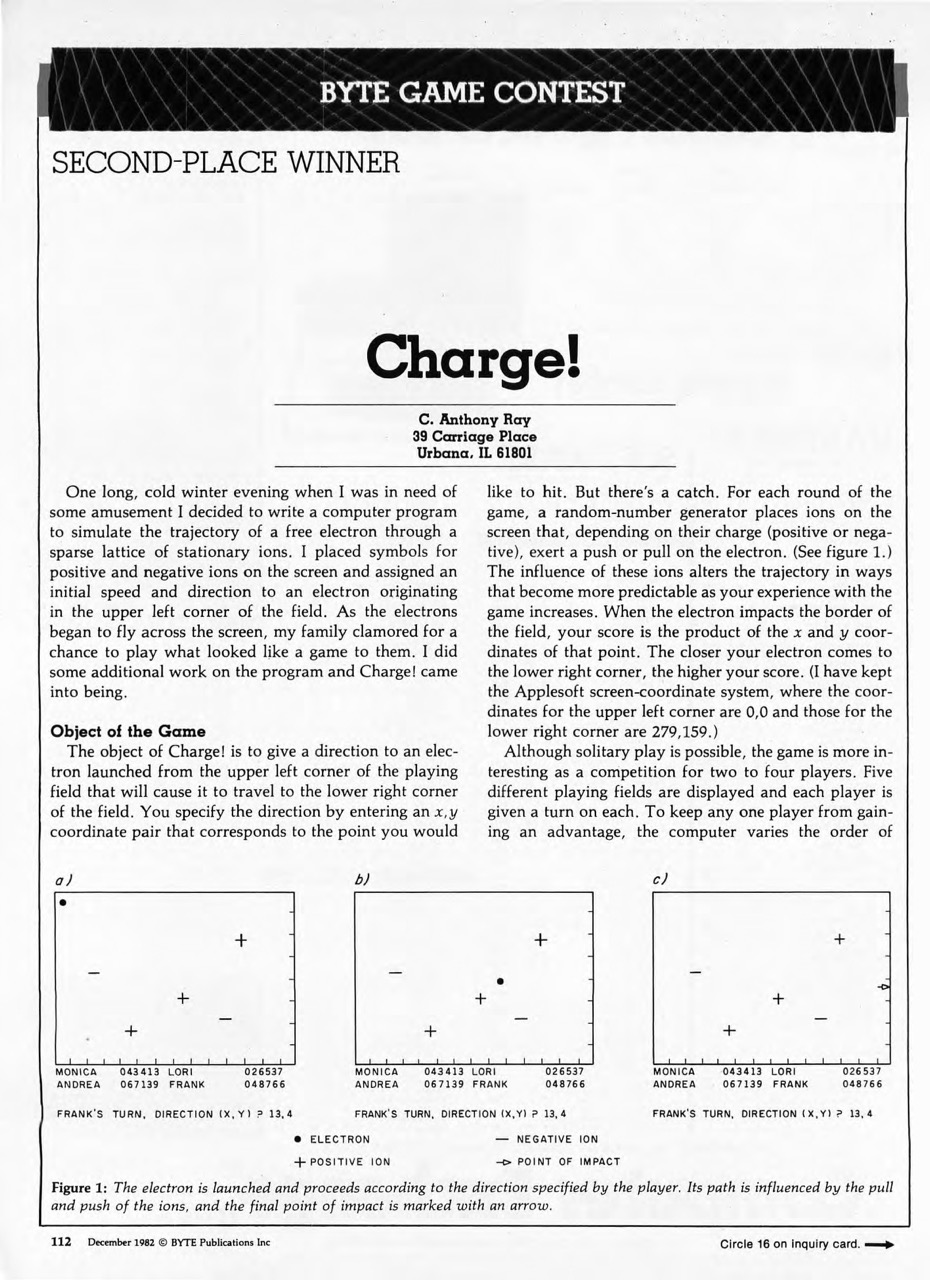

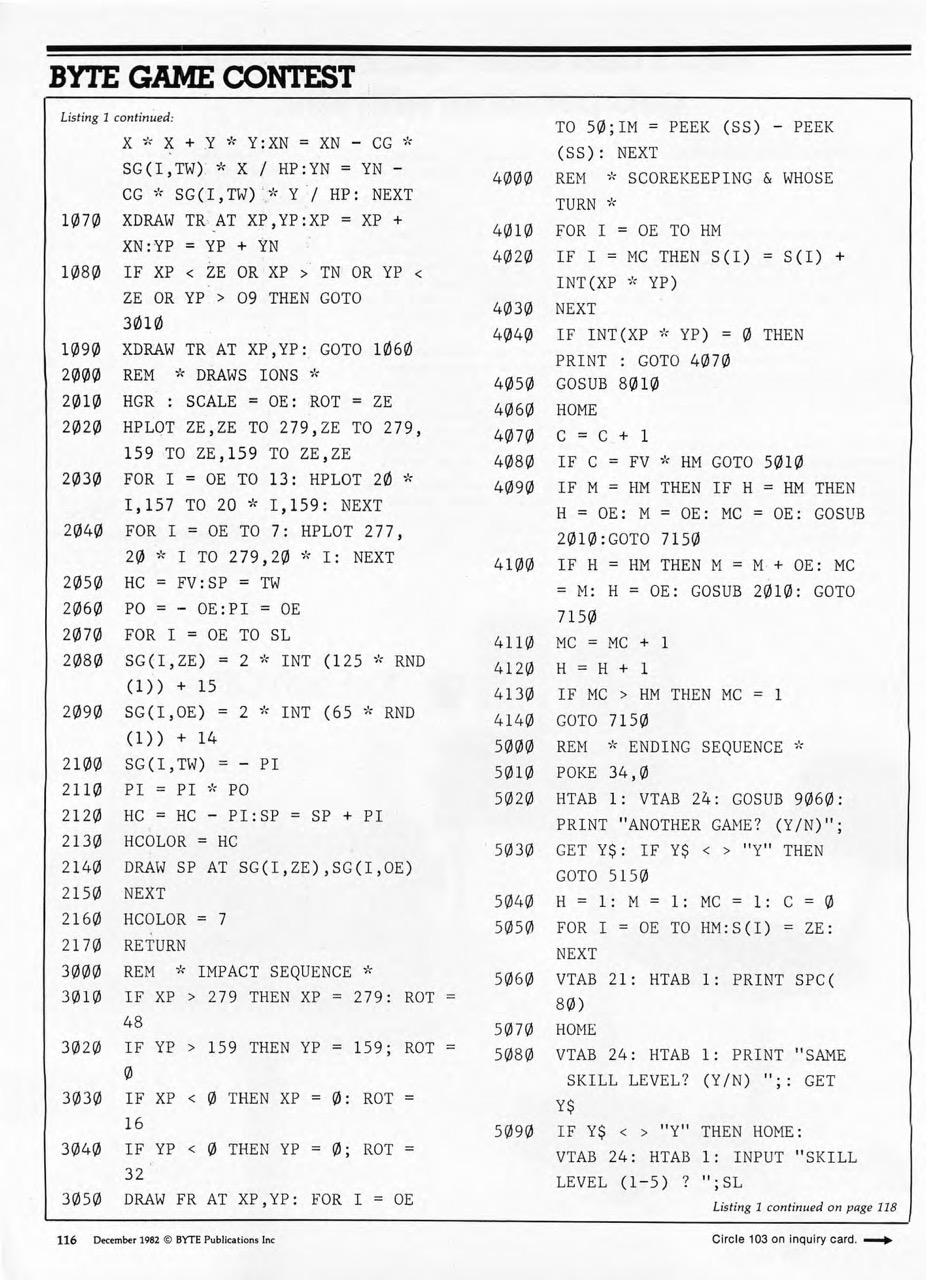

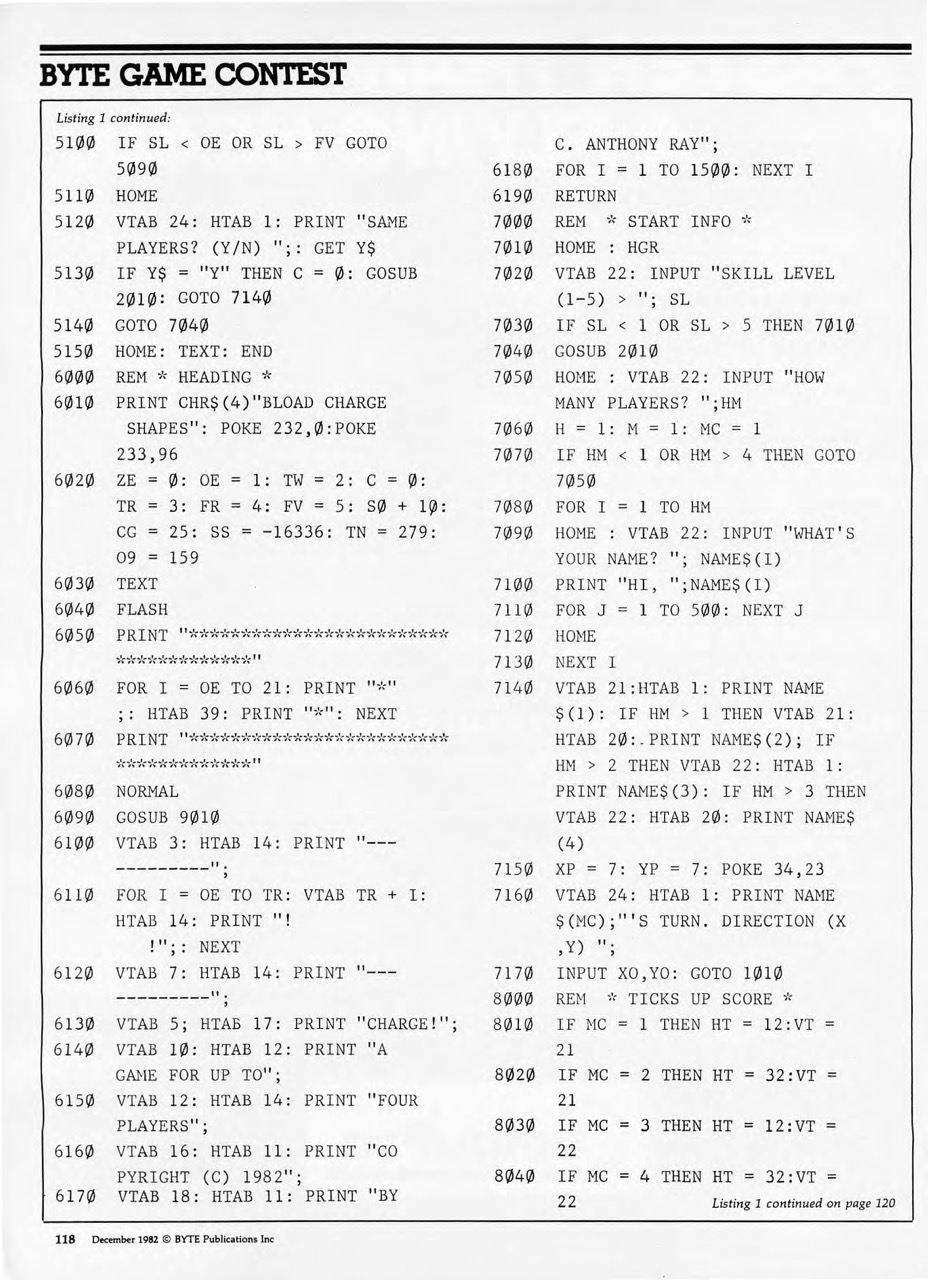

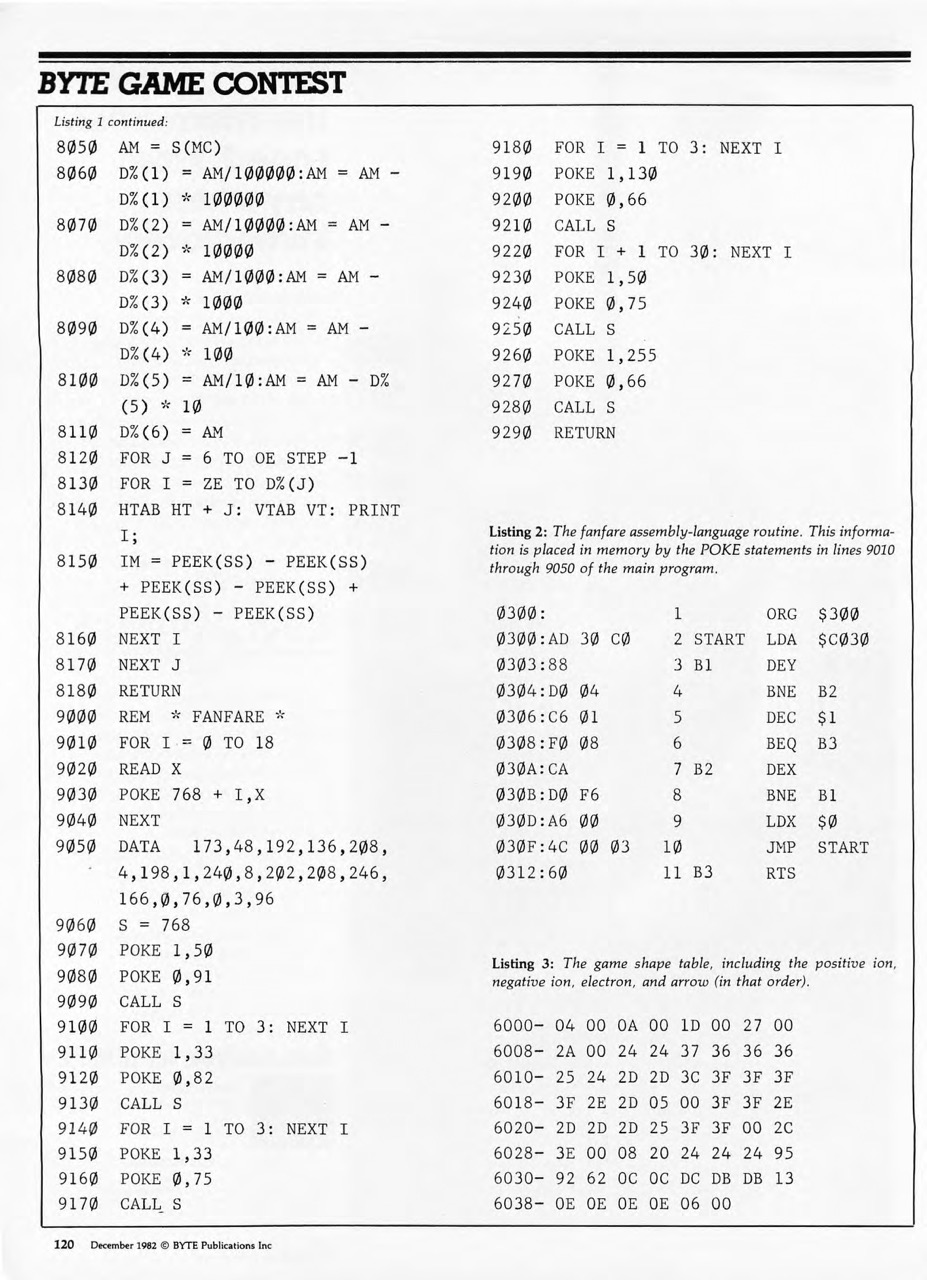

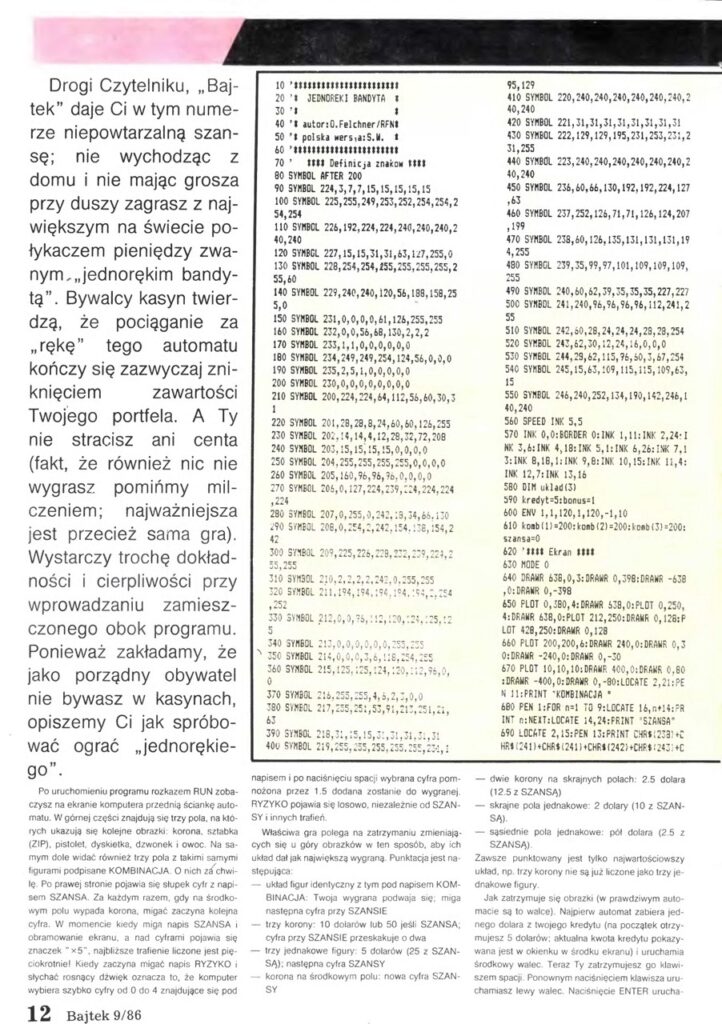

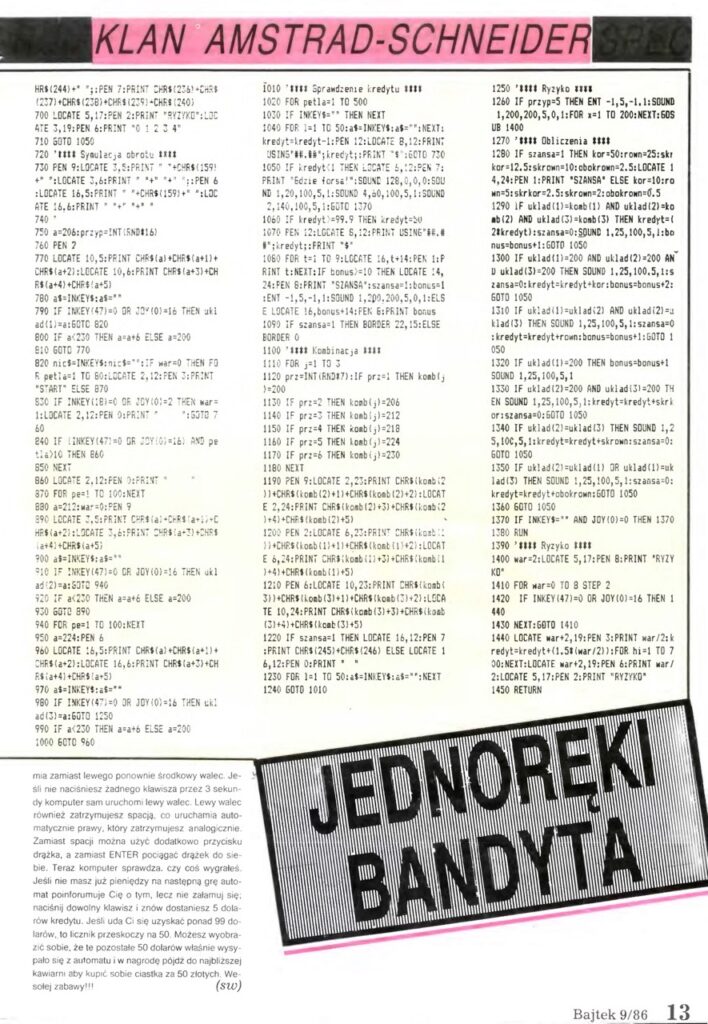

The best proof of how much educational and fun value you can create in BASIC is the popularity of type-in programs and games in these decades. Having so many home computers readily booting into a simple programming language command line and interpreter made it possible to popularize simple coding. Computer magazines popular at the time, like Byte in the US and its smaller cousin Bajtek in Poland (we’d need to confirm this, but “Bajtek”, pronounced byte-æk, means a “cute little byte”, so it’s a perfect reference both to computing and to the American magazine), printed so-called “type-in programs”, meaning you could literally type them in straight from the magazine into your computer, in reasonable time, and have some fun with it!

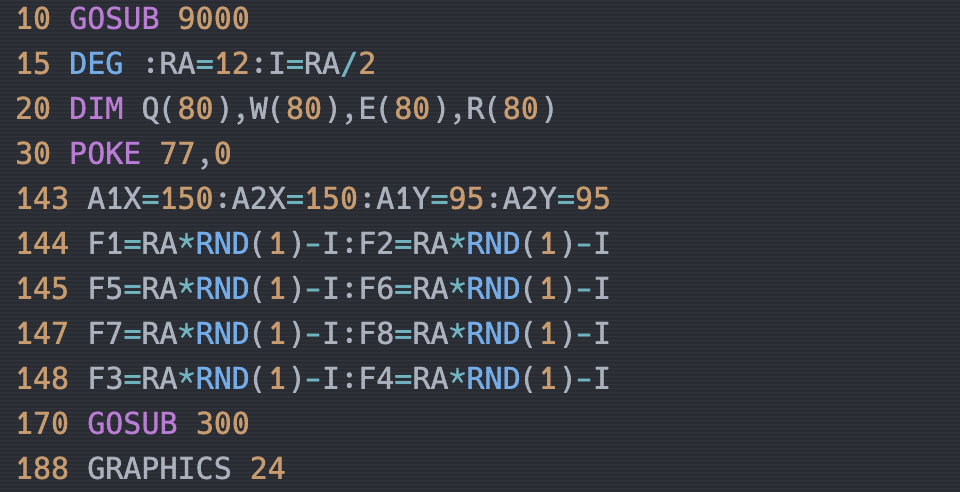

Some type-ins were utilities, like physics calculators or a word counter for writers. Others were games. Here’s an example type-in game from 1982’s BYTE magazine:

Or a slot machine one from Bajtek:

Let’s add a mental note: a medium for transferring a computer program? Paper.

In another Byte issue, June 1982, the magazine touches on an important topic: lack of standardization. Even though work on the standard defining what the language should provide started in 1970, a proposal appeared only in 1983 (Byte 02/1983).

Why still use line numbers?

It’s popular to hate BASIC for the use of line numbers, a feature very visible here, and very frowned upon if you started with a more powerful computer. But let’s recollect the facts again, to see why it would actually make sense:

- It allowed adding a line to a program in memory – without saving it to a tape deck, or, if you bought one of the more expensive computers or add-ons, a diskette. You could edit arbitrary line numbers without sacrificing a good part of your 16, or 48, or 64 kilobytes of RAM for a text editor.

- They still served as good GOTO and GOSUB labels to jump to – a set of text labels would use much more space in the computer memory, a luxury that was very expensive at the time. Even the keywords, represented as PRINT or INPUT on the screen, were stored as single-byte tokens in memory, to save space. That’s material for another article.

- You could mix editing a program with interactively checking if your command works – something you wouldn’t be able to do if your computer was now in a text editor mode (8-bit home computers were not organized around multitasking – you usually worked with one piece of software at a time). Let’s say you were coding a simple game, and wanted to add a sound when something happens. You wrote the code up to the reaction you’re adding, but you want to test if your SOUND command sounds good. You could just omit the line number to get it executed immediately. You got a preview, you were content with the result, you added it to a program.

- It definitely helped not getting lost when typing in code from a magazine or a book either! Plus, with text editors at the time not numbering lines for you, having the ability to say “please look at line 230” when discussing code with someone else was actually helpful in this context.
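The remark about keywords being stored as single-byte tokens can be illustrated with a short Python sketch. Treat it as a toy: the token values in `TOKENS` are invented for this example and do not match any real machine, and the `tokenize` helper is hypothetical.

```python
# Hypothetical token table: the byte values are made up for this sketch
# and do not correspond to any particular computer's ROM.
TOKENS = {"PRINT": 0x80, "INPUT": 0x81, "GOTO": 0x82, "GOSUB": 0x83}


def tokenize(statement: str) -> bytes:
    """Replace keywords outside string literals with one-byte tokens."""
    out = bytearray()
    in_string = False
    i = 0
    while i < len(statement):
        if statement[i] == '"':
            in_string = not in_string
        keyword = None
        if not in_string:
            keyword = next((w for w in TOKENS if statement.startswith(w, i)), None)
        if keyword:
            out.append(TOKENS[keyword])
            i += len(keyword)
        else:
            out.append(ord(statement[i]))  # plain ASCII character, kept as-is
            i += 1
    return bytes(out)


line = 'PRINT "PRINT"'
print(len(line), len(tokenize(line)))  # 13 9
```

Only the keyword outside the quotes shrinks to one byte; the text inside the string literal is left alone. On a machine with 16 kB of RAM, savings like this add up quickly across a whole program.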

But how good was it, and how easy was BASIC to use to solve a problem?

I decided to check again myself, and started doing a few of this year’s Advent of Code challenge tasks in BASIC. To avoid needing even an emulator, I picked a contemporary BBC BASIC, but limited myself to the features I remember having on an Amstrad CPC6128 (or so I thought). So by this time each user could do IF ... THEN ... <more than 1 statement> ... END IF, for example. And we could have variables of any name length (however some sources hinted that the one-letter ones are the fastest, because they have a static memory address; was it true?), so it shouldn’t be so bad, right?

It was horrible.

Solving challenges from day 1 (solution) or day 2 (solution) was fun, even – or especially – when trying to be fairly memory efficient, and a little dirty on the approach. But then comes day 3, where we work with 140×140 matrix of characters. Some of them form numbers, some are symbols, everything else is filled with . as blank space.

Task one is to find all numbers that are adjacent to a symbol (next to it, above or below, or diagonally). One possible way to do this is to load 3 lines into memory at a time, process the middle one as the “current” one, and scroll through the dataset without ever keeping more than 3 lines in memory. Then, keeping in mind the lack of more advanced string manipulation functions like “split”, “find” or “indexOf”, not to mention regular expressions, we can process such a line character by character. If it’s a digit, we’re inside a number. Update the number digit by digit (n=10*n+digit), add it to the known numbers when we’re out of digits, and check for adjacency of a symbol.
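To make the approach concrete, here is a minimal Python sketch of the same idea: a three-row window, a character-by-character scan, and the n=10*n+digit update. It is not the BASIC solution from the post; the function names are made up, and it assumes all rows have the same width and that anything other than a digit or "." is a symbol.

```python
def is_symbol(ch):
    """Anything that is neither a digit nor the '.' filler counts as a symbol."""
    return ch != "." and not ch.isdigit()


def part_numbers_in_row(above, current, below):
    """Scan `current` one character at a time; return numbers touching a symbol."""
    width = len(current)
    found = []
    n = 0             # number being accumulated
    inside = False    # are we inside a run of digits?
    touching = False  # has any digit of this run had a symbol neighbour?
    for x in range(width + 1):            # one extra step flushes a trailing number
        ch = current[x] if x < width else "."
        if ch.isdigit():
            n = 10 * n + int(ch)
            inside = True
            for row in (above, current, below):
                for nx in (x - 1, x, x + 1):
                    if 0 <= nx < width and is_symbol(row[nx]):
                        touching = True
        elif inside:
            if touching:
                found.append(n)
            n, inside, touching = 0, False, False
    return found


def sum_part_numbers(lines):
    """Slide a three-row window over `lines`, holding at most three rows at once."""
    total = 0
    above, current = None, None
    for row in lines:
        row = row.rstrip("\n")
        blank = "." * len(row)            # stands in for the missing row at an edge
        if current is not None:
            total += sum(part_numbers_in_row(above or blank, current, row))
        above, current = current, row
    if current is not None:               # the last row has nothing below it
        blank = "." * len(current)
        total += sum(part_numbers_in_row(above or blank, current, blank))
    return total


grid = ["12..3", "..*..", ".45.6"]        # a made-up grid, not puzzle input
print(sum_part_numbers(grid))
```

In this made-up grid only 12 and 45 touch the `*`, so the sum is 57. Even here the three flags (`n`, `inside`, `touching`) form a small state machine; the difference from the BASIC version is that they stay local to one function.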

It all sounds simple, if you can abstract your logic into functions, or some kind of smaller modules. But once the code grows a little bigger, and due to lack of memory, utilities, and all that stuff, you want to keep a few more flags for your happy little state machine, things get complicated.

The day 3 solution that works is 210 lines long. In a 2020s IDE, and a 20XXs programming language, that’d be small and easy to maintain. But at that point, having all variables global makes them hard to track and reuse correctly, not being able to have functions or procedures makes it harder to know inputs and outputs (so GOSUB solves only half of the problem), and it feels like you spend more time on the low-level end of each operation than on actually solving the main problem. When I tried to run it with BBC BASIC for CP/M, it also turned out that even the part reading one line from the file would need a rewrite to a more low-level byte-by-byte approach, as the GET$#... TO ... keyword is not supported.

The determined coders

These difficulties don’t mean sophisticated programs or games couldn’t be created for the computers in their native BASIC! The Amstrad CPC demo program, showing off graphics, music, spreadsheet and word processing functions, is written entirely in BASIC, except for the “Roland in Time” game fragment.

Or check out a more modern (2006) demo, written entirely in Atari BASIC for Atari XL/XE (feel free to use 2x speed):

These demos feature something you haven’t seen before! Graphics (and sound)! More or less advanced, but we see things in color, we see them animate, and in some cases we can also hear sounds and music. This would not have been possible in the dialects of BASIC designed to run remotely and print on a teletype. By moving the computer physically into the user’s room, the industry opened the door to multimedia and more dynamic entertainment.

Note: not all BASICs at the time had graphics keywords. Commodore 64’s, for example, did not, but some BASIC-coded graphic effects could leverage the way it printed text characters in color, and that it could support sprites – which were graphics that could be overlaid on top of regular screen content without extra copying operations!

I’m an artist!

Enabling interaction richer than text prompts and answers is more than just that technical difference, more than an item on the spec list.

Every language that makes it easy to create art opens up your creativity, invites experimentation, and gives you control and means of expression.

And it did just that, two seconds away from toggling the POWER switch. Many examples, including type-ins, were combining mathematical functions and PLOT (draw a point) or LINE (draw a line) for surprising, mesmerizing artistic effects. Below is a (10x speedup) capture of “Crystals” demo written in Atari BASIC on Atari XL/XE.

These capabilities, allowing one to create procedural art on their own, are, I think, the biggest advantage of the BASIC language and its biggest impact on the average computer user. The full source code isn’t even big, see the full listing under the link below:

The magic of visual programming is what can be attractive to a non-nerd, and what introduced basic coding to the masses in the 80s and 90s.

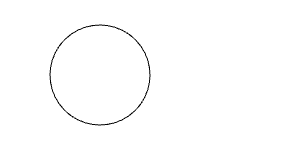

I have put this risky hypothesis in the post’s title: that BASIC might have done something better than most languages. This is the thing better about BASIC – easy access to the computer’s fundamental graphical and musical capabilities. The commands were simple, yet the simplicity invited experimentation, and building upon them. Manuals for computers at that time also often taught you the math behind some shapes, explaining how and why a circle can be expressed as (x, y) = (r*sin(a), r*cos(a)) for an angle a sweeping a full turn, and therefore how to draw it with a LINE.
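As a small worked example of that circle math, here is a hedged Python sketch that computes points on a circle and pairs them up into the short line segments a LINE-style command would draw. The function name `circle_points` and the `steps` parameter are illustrative assumptions, and the sketch only prints coordinates instead of drawing.

```python
import math


def circle_points(cx, cy, r, steps=36):
    """Return steps+1 points around a circle; the last one repeats the first."""
    points = []
    for i in range(steps + 1):
        a = 2 * math.pi * i / steps          # angle in radians, one full turn
        points.append((cx + r * math.sin(a), cy + r * math.cos(a)))
    return points


# Each consecutive pair of points would be one LINE call on a real screen.
pts = circle_points(100, 75, 50, steps=8)
for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
    print(f"line from ({x0:.1f}, {y0:.1f}) to ({x1:.1f}, {y1:.1f})")
```

With only 8 steps the result is an octagon; raising `steps` makes the polygon indistinguishable from a circle at a given screen resolution. Swapping sin and cos only changes where the circle starts and which way it is traced.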

Of course, modern and widely popular languages support all of that too (why would you remove something that works?), one of the closest examples in terms of simplicity and popularity would be JavaScript canvas. Sample code and effect on the left for JavaScript, right for BASIC:

JavaScript (left):

const canvas = document.getElementById("myCanvas");

const ctx = canvas.getContext("2d");

ctx.beginPath();

ctx.arc(100, 75, 50, 0, 2 * Math.PI);

ctx.stroke();

BASIC (right):

ORIGIN 100,75:r=50

FOR i=0 TO 360:PLOT r*sin(i),r*cos(i):NEXT

The BASIC example seems simpler, even though it doesn’t have a keyword/function for arcs or circles, and its output is the result of typing in the code on the right directly after booting the 8-bit computer.

The example on the left, smoother, higher resolution, and much faster to paint, is on the other hand longer, and… incomplete. It refers to an element myCanvas within a HTML document that is not even part of the example (so we need at least one line more, somewhere, and a web browser).

Most languages will have many ways to paint things onto the screen, and there will be dozens of libraries to choose from – this is both for better and for worse. If the language doesn’t support something with the built-ins, it means both flexibility and the need to find the best tool for the job, a way to refer to it, possibly install or bundle it with your program, and so on – these problems don’t exist if you work with a simple, but highly integrated environment. You could say BASIC helped to learn the basics. Once you master that, you usually want to move on to a more powerful toolkit no matter what the machine is.

Edit 2024-01-19: Gallery of awesome

One of the best examples of what you can do in BASIC on a very simple, very old computer is the BBC Micro Bot gallery. It’s a gallery of short (fitting within a single toot!) BASIC programs rendered live in the browser by a BBC Micro computer emulator, most of them presenting really impressive graphic feats.

Examples include a famous raytraced scene:

Or an amazing high-FPS effect:

Some of these, of course, take hours to prepare or render, which is zapped into the future by clever magic of the emulator on the site – so we see the final effect (though in real time again).

Today

This gives us two aspects to look at “today” in:

- How is BASIC doing today? How are competitors doing? What other languages are the most popular? 🙂

- What gives similar user experience today, but is more modern, easier to use, and just as fun?

The others are not dead yet

Surprisingly, none of the languages mentioned previously for the 70s, 80s and 90s is dead.

- PL/I is still supported and maintained by, as it always has been, IBM. Here’s a PL/I manual from 2019.

- Nordea is still hiring COBOL developers. Desperately, I presume.

- Fortran is alive and somewhat kicking. Latest spec is Fortran 2023.

- APL is more a curiosity – a language where a valid statement may look like {↑1 ⍵∨.∧3 4=+/,¯1 0 1∘.⊖¯1 0 1∘.⌽⊂⍵} is not easily adopted, and gets the hearts of hobbyists and mathematicians more than the average programmers.

The top 10 programming languages have changed since the 70s (or 80s). According to IEEE’s ranking for 2023, the top 10 languages are: Python, Java, C++, C, JavaScript, C#, SQL, Go, TypeScript, and… HTML (huh? the name literally says it’s a Markup Language). Notably, C, which started in 1972 but was officially published in 1978, couldn’t be criticized by Dijkstra at the time. Visual Basic is ranked 24th, as Microsoft decided not to further develop the language in 2020. Fortran remains at 27th place, COBOL at 34th, and Ada at 36th. Lastly, Pascal/Delphi is mentioned at 45th place.

Neither is BASIC

BASIC didn’t stop evolving either, of course. The dialects below show the language is still valued for simplicity and ease of use with hardware. Notable mentions include:

- The QuickBASIC that was included in MS-DOS, keeping it possible to easily access a basic programming language.

- Visual Basic (with Visual Basic .NET, VBScript, and office packages scripting languages such as Microsoft’s Visual Basic for Applications and OpenOffice Basic that evolved from this one) – Microsoft’s continuation of the BASIC lineage over the years. You could trace its evolution from a beginner-friendly language to a full-featured professional development tool. However, in 2020 Microsoft announced there is no further development of the language planned.

- FreeBASIC. This is an open source project, and very feature rich.

- BBC BASIC – this one has a long history! It’s an evolution of the language created for the BBC Micro computer in 1981. Supports a massive amount of operating systems today (from 80s CP/M computers, through Raspberry Pi, to Android and iOS). The site is also strong on the documentation side.

- SmallBasic by Microsoft – intended to learn programming, “even by kids”, which actually suggests it may focus on the simplicity of “fun” features that BASIC brought. It also brings in turtle graphics, a concept introduced by the language LOGO, which brought a new, relative, approach to drawing things on the screen.

- BASIC dialects such as B4X can even run on microcontrollers – versions of BASIC have been created for Arduino and other microcontroller boards, bringing back the spirit of early PC BASICs.

- PBASIC – A commercial BASIC variant created specifically for Parallax’s BASIC Stamp microcontrollers. Known for its accessibility and approachable documentation.

But what would have the same effect today?

While the BASIC name may not have the popularity it once held in the home computing era, the language’s legacy lives on in various forms today.

A great place to start and see immediate interesting effects with little code would be the Processing language (also available in JS as p5js). They provide simple APIs for drawing graphics, animations, and visualizations in an immediate way reminiscent of classic BASIC interpreters. Take a look at the example: Recursive Tree / Examples / Processing.org – the language has features that BASIC was lacking, allowing you to properly structure the code, and it has super easy to use commands to draw things on the screen, or even render 3D objects.

Another neat example with some sound, a simple concept, yet playful: p5.js Web Editor | BUBBLE WORDS (p5js.org)

The notion that Processing is an example of is called “creative coding”, and Processing is as good at it as BASIC was in the 80s. Check out the Bull’s eye demo below, or Floating In Space:

I would highly recommend it for creative and fun experiments, but there are other options, too, of course. Let’s mention at least a few.

Scratch, the colorful block-based programming language designed for kids, carries the torch of BASIC’s legacy perhaps better than any other modern tool. By using visual blocks that snap together like puzzle pieces rather than typed syntax, it removes a major early barrier to coding creativity that existed even in simplified BASIC versions. Themed graphics, animations, and sound libraries make exploration even more fun for budding young programmers. Just as BASIC and early home computers created a gateway for many tech pioneers, Scratch aims to foster that same experimental spirit, no matter a child’s prior access to technology or education. Its online community also connects peers to share and remix projects – a markedly more social approach than solo BASIC tinkering of the past.

If you’re more comfortable with programming in general and don’t mind using more commands to achieve your result, as the cost of more flexibility and portability, consider JavaScript. While not strictly BASIC-derived, JS has a very loose, dynamic style that echoes some qualities of the language – especially in the browser, where it can easily access multiple ways to manipulate text, graphics, and sound. The fact that it’s so widely used for interactive web apps connects to BASIC’s interactive nature and its past ubiquity.

Edit 24.12.2023 – As todd8 on HN mentions, one of the best other options for starting programming and trying out solving any coding problems – whether it’s something simple, or more complex, a coding challenge, a game, or home automation – is Python.

I have used Python as my main programming language intermittently since the beginning of the part of my life where it actually became my career, including a few years of Python-only focus, and this is definitely a language that takes the “how” (to do the operation you want on your data) out of the way, letting you focus on the “what” (you are trying to do, or build). It has some syntax sugar that may seem “magical” to a newcomer, like list comprehensions, but after a while, getting a good feel for what is idiomatic in Python lets you write very clear code that achieves a lot within just a few lines, or even one – and is still perfectly readable!
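For a newcomer wondering what that “magic” looks like, here is a small illustrative comparison of an explicit loop and the equivalent list comprehension. The word list is made up for the example.

```python
words = ["PRINT", "input", "Goto", "gosub"]

# The explicit loop spells out *how* the result list is built, step by step.
jumps_loop = []
for word in words:
    if word.startswith(("G", "g")):
        jumps_loop.append(word.upper())

# The list comprehension states *what* the result is, in one line.
jumps_comp = [word.upper() for word in words if word.startswith(("G", "g"))]

print(jumps_comp)
```

Both variables end up holding the same list; the comprehension simply reads closer to the sentence “the upper-cased words that start with G”.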

If you’re new to programming, and the creative (visual, audible, artistic) part is not your focus, Python would be my recommendation. If you’re not new, the answer may still be Python 🙂

None of them would greet you on the screen two seconds after pressing the power button, though. Having this experience, a computer READY for commands, within seconds, a distraction-free environment for experimentation (link to the opening post that made me start RetroFunPL), was more encouraging than finding the right language, editor, browser, directory on disk, or installing Python or anything else outside of the browser, if it’s all new to you.

Now and then

While the BASIC name may not have the popularity it once held in the home computing era, the language’s legacy lives on in various forms today. Modern tools like Processing and p5.js for creative coding projects have inherited BASIC’s focus on accessibility and rapid visualization for beginners. They provide simple APIs for drawing graphics, animations, and visualizations in an immediate way reminiscent of classic BASIC interpreters. Scratch carries on BASIC’s mantle for introducing young students to programming in a fun and intuitive environment.

Even outside the realm of purely educational tools, JavaScript itself, despite no direct lineage from BASIC, has a flexible, beginner-friendly coding style that echoes some of BASIC’s most famous qualities. The fact that JavaScript powers most interactive websites and web apps today mirrors how BASIC enabled new realms of software interactivity in the early PC era. And for those yearning for BASIC’s glory days on microcomputers, modern microcontroller boards like Arduino often have custom BASIC interpreters and compilers created by the community to control hardware projects. So while it evolves across new platforms, BASIC’s accessibility and focus on rapid iteration persists in the DNA of many modern coding tools.

Edit 24.12.2023: Many discussions around “BASIC is bad” are also revolving around “GOTO is bad”. Yes, an unconditional jump to any place in your program may be bad if you violate the boundaries of the code structures, like jumping from one function to another. But in the cases we speak of, there are no structures.

One can also argue, and many people do (if I understand zozbot234’s comment on HN correctly), that the problem lies just as much on the “receiving end” – if you can jump to any line in the code, it’s hard to know whether a given line is a target of such jumps, or where they come from. This is a real problem when reading the code, one that functions, procedures, and classes solve neatly.
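To illustrate the “receiving end” problem, here is a rough Python sketch that scans a made-up BASIC listing and reports which lines are jump targets and where the jumps come from. It is a naive approach: the regular expression only recognises a literal GOTO or GOSUB followed by a number, so forms like `THEN 60` or `ON x GOTO 10,20` are missed, and a GOTO inside a string literal would be miscounted.

```python
import re

# A made-up listing, only for illustration.
listing = """\
10 INPUT A
20 IF A<0 THEN GOTO 60
30 GOSUB 100
40 PRINT "DONE"
50 END
60 PRINT "NEGATIVE"
70 GOTO 40
100 PRINT A*2
110 RETURN
"""


def jump_targets(source):
    """Map each target line number to the line numbers that jump to it."""
    targets = {}
    for line in source.splitlines():
        number, _, body = line.partition(" ")
        for match in re.finditer(r"\b(?:GOTO|GOSUB)\s+(\d+)", body):
            targets.setdefault(int(match.group(1)), []).append(int(number))
    return targets


for target, sources in sorted(jump_targets(listing).items()):
    print(f"line {target} is reached from {sources}")
```

Nothing in line 40 itself says that line 70 jumps to it; that information only exists elsewhere in the program, which is exactly what makes such code hard to read.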

While Dijkstra’s inflammatory criticism of BASIC was controversial, his quote sparked discussion that influenced the growth of computer science education and programming language design. The history of BASIC illustrates how strongly opinions can differ regarding the best way to balance simplicity and power when creating tools for novice programmers. In its early days, BASIC favored ease of use over advanced capabilities, though over time it evolved by incorporating more features without compromising approachability. Modern BASIC dialects aim to offer a gentle starting point along with capabilities to take on more complex coding. There are still debates around finding the right equilibrium to serve programmers across the skill spectrum. However, the differing perspectives pushed the field forward. In the end, a diversity of languages can coexist, fitting different needs. The intensity of Dijkstra’s viewpoints sheds light on how passionately programmers care about building the best systems for their peers to create software magic and unlock human potential. While his criticism was extreme, it opened valuable dialogue.

See also

Dartmouth College documentary about BASIC for the 50th anniversary (2014) highlights other key features of the system, such as time-sharing (BASIC was actually used concurrently by multiple users!)

BASIC

- Example Dartmouth BASIC manuals: first and 4th edition of the language:

- BASIC Type-Ins by Sean McManus: Amstrad CPC 464 664 6128 Basic programming tutorial and games. The Basic Idea (sean.co.uk)

PL/I

- PL/I Bulletin archive at teampli.net

- If you’re curious how PL/I was used in Multics source code, here’s a sample utility.

COBOL

- Some friendly COBOL examples with modern terminology here: 7 cobol examples with explanations. | by Yvan Scher | Medium — I do not condone closed platforms like Medium, Yvan says he moved his blog to his own domain, but that domain doesn’t work anymore.

Processing

- Discover – OpenProcessing – example gallery to get inspired

[…] Article URL: https://retrofun.pl/2023/12/18/was-basic-that-horrible-or-better/ […]

“limited to a single letter or a letter followed by a digit (286 possible”

How do you get that number?

10*26 (each single letter followed by a single digit 0-9) + the 26 letters by themselves. 260+26 = 286.

Nice 🙂 I wrote about it not long ago – in a much shorter form than this. Though I’d be happy if the reality would allow me to publish things of such a volume and depth, rather than condensing stuff so much as I do.

Yes, I’m going against the current here with a few things – like hosting my own WordPress rather than posting on social media, announcing the post on Mastodon (RetroFunPL@8bit.red) rather than other social media… and of course assuming that people who can still read a longer piece that tries to go through a topic, without getting bored, still exist.

It did cross my mind that if I ever feel confident enough to post it as an item on Hacker News (someone else did that for me:)), that would be a kind of audience that *would* be able to read it.

And did the reality allow…? The original post creation date was November 18, and it took me a month of trying to do my research in between after-work and house duties to get there. I am generally more happy with posts like this or the one about 8-bits that started the blog, than the short news/discoveries ones. One day I’d like RetroFunPL (the blog or the YT channel) to be a cool place to visit for something fun at least remotely related to the 8-bits 🙂

By “reality” I meant my publishers 😉

I would not only allow, but also appreciate! Wanna publish something on the super niche RetroFun.PL sometimes? 🙂

https://yvanscher.com/writing/7_cobol_examples

Thanks! Looks like a good place to start getting ready to be a superhero for some large Scandinavian bank one day!

I am a curator for a retro computing museum (Vienna, Austria) and this year set up an operational 8-bit computing exhibit where visitors are encouraged to touch and engage with the machines (the machines still work great) – and one of the key things we promote in the exhibit, is the 10-LINE BASIC PROGRAMMING COMPETITION, which not only serves to fulfill multiple purposes of the museum (games, fun, retro) but *also* introduces people to BASIC programming, as it was, and as it still is.

On 40-year old machines which still work great.

So I think in the end the proof is in the fact: BASIC is just a great way to introduce someone to a computer. The immediate interactive nature of it as a system interface puts it in a separate class from most other languages – although of course many of us are familiar with a REPL or Lua console, etc., to the average new user (which these systems were designed for), BASIC is a perfectly cromulent interface.

I see teenage kids sitting on the machines, hacking away on the 10-line basic entries we have in place (Oric ATMOS forever!!!), laughing and engaging, and getting lost in the mysteries of it, and digging themselves out – just as I did, in 1982, as a 12 year old, also.

Very, very few other languages can deliver that. Therefore BASIC has its place. And those whose brains have become irretrievably discombobulated by BASIC, such as Dijkstra et al., simply need to watch the kids. They’ll *always* show us the truth.
